When I was working at Google back in the mid-2000s, we dealt with tens of billions of ad impressions a day, trained several machine learning models on years' worth of historical data, and used frequently updated models to rank ads. The whole system was an amazing feat of engineering, and no other system out there came close to handling this much data. It took us years and hundreds of engineers to make this happen. Today, the same scale can be achieved in any enterprise.

Rapid innovation in cloud data technology and exponential growth in the number of new products and companies in the data and analytics space are what make this possible. A subset of these tools is often referred to as the “modern data stack.”

What is a modern data stack?

A modern data stack is a collection of tools and cloud data technologies used to collect, process, store, and analyze data. All the tools and technologies in a modern data stack are designed to handle large volumes of data, support real-time analytics, and enable data-driven decision-making. It helps business users, analysts, and developers make the most of their data. It is typically made up of five layers.

1. Data sources:

This is where data originates. Think Salesforce, ServiceNow, HubSpot, etc. These data sources produce massive amounts of raw, unorganized data that organizations need to decipher.

2. Extract and load tools:

In order to provide value, and not live in a silo, data must be extracted from its data sources. That’s where tools like Matillion, Airbyte, Fivetran and Supermetrics come into play. These tools organize the data in preparation for the cloud data warehouse.

3. Cloud data warehouse:

Sometimes referred to as a cloud data platform, a cloud data warehouse (CDW) is where your organized data is stored.

4. Transformation tools:

Transformation tools like dbt work inside the cloud data warehouse and reshape your data in preparation for analytics. Transformation can happen at different points in the data pipeline: after loading, as in extract, load, transform (ELT), or before loading, as in extract, transform, load (ETL).

5. Experience and analytics:

All of these tools are required in order for business users to interact with and draw insights from their data. That’s when data visualization tools like ThoughtSpot come into play.
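The five layers above can be sketched end to end in a few lines. This is only an illustration under stated assumptions: Python's built-in `sqlite3` stands in for a cloud data warehouse, and the records, table names, and function names are hypothetical rather than taken from any of the products mentioned. It follows the ELT order, landing raw data first and transforming it inside the warehouse.

```python
import sqlite3

# Hypothetical raw records, standing in for an export from a source system.
RAW_OPPORTUNITIES = [
    {"id": 1, "region": "EMEA", "amount": "1200.50", "stage": "closed_won"},
    {"id": 2, "region": "EMEA", "amount": "300.00", "stage": "open"},
    {"id": 3, "region": "APAC", "amount": "980.25", "stage": "closed_won"},
]


def extract_and_load(conn, records):
    """Extract and load: land the source records unchanged in a raw table."""
    conn.execute(
        "CREATE TABLE raw_opportunities (id INTEGER, region TEXT, amount TEXT, stage TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_opportunities VALUES (:id, :region, :amount, :stage)",
        records,
    )


def transform(conn):
    """Transform inside the warehouse: cast types and keep only won deals."""
    conn.execute(
        """
        CREATE TABLE won_deals AS
        SELECT id, region, CAST(amount AS REAL) AS amount
        FROM raw_opportunities
        WHERE stage = 'closed_won'
        """
    )


def revenue_by_region(conn):
    """Analytics: the kind of question a business user might ask."""
    rows = conn.execute(
        "SELECT region, SUM(amount) FROM won_deals GROUP BY region ORDER BY region"
    )
    return dict(rows.fetchall())


def run_pipeline():
    # In-memory SQLite is an assumption used here in place of a real warehouse.
    conn = sqlite3.connect(":memory:")
    extract_and_load(conn, RAW_OPPORTUNITIES)
    transform(conn)
    return revenue_by_region(conn)
```

Calling `run_pipeline()` returns won revenue keyed by region. In a real stack each function would be a separate tool, but the hand-offs between layers look much the same.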

So what makes a tool in the modern data stack modern?

1. Easy to try and easy to deploy:

Most of the tools in the modern data stack are SaaS offerings. They are available to try with a free trial or open-source. They're easy for the average user to understand, as opposed to requiring hours-long training or presentations. They are also usually well integrated with other tools in the modern data stack, so the cost of trying is not high. And they are easy to deploy in production because you do not have to worry about hosting and security.

2. Massive scalability:

Another key aspect of modern tools is that they are designed for massive scale. This is partly due to the elastic nature of the cloud and partly because most of these modern systems (e.g. Snowflake, Databricks, ThoughtSpot) are built on distributed systems principles that allow for horizontal scalability, unlike older desktop and single-server applications. If we double-click on scalability, there are three main dimensions to consider:

Data: Can this solution deliver delightful performance even when you are dealing with hundreds of billions or even trillions of records?

Users: Does the tool work when your user base is in the tens of thousands or, if you are exposing it to external customers, possibly millions of users with high concurrency?

Use Cases: As the number and complexity of your use cases balloon, how does the tool let you manage the complexity? This is where tools built around dashboarding, cubes, and extracts fail users significantly. In each use case, each new business question results in new work that is usually a variation on something that has been done many times before. Organizations then end up with tens of thousands of dead dashboards and reports. This isn't limited to the analytics layer either. We see it in the transformation layer as well as the metrics layer.

3. Composable data stack:

The idea behind a composable data stack is that each product behaves more like a configurable component in a larger architecture than like its own island. In the networking space, it used to be that you configured each of your routers manually. Managing a complex network required a lot of people, and it was prone to errors that caused massive disruptions. Over the last 10 years, there has been a massive transformation in that space. Now everything can be configured programmatically through a control plane, changing the management of large complex networks for the better. The same thing is happening in the data space. So how do you know if a tool follows similar principles?

Benefits of using a modern data stack

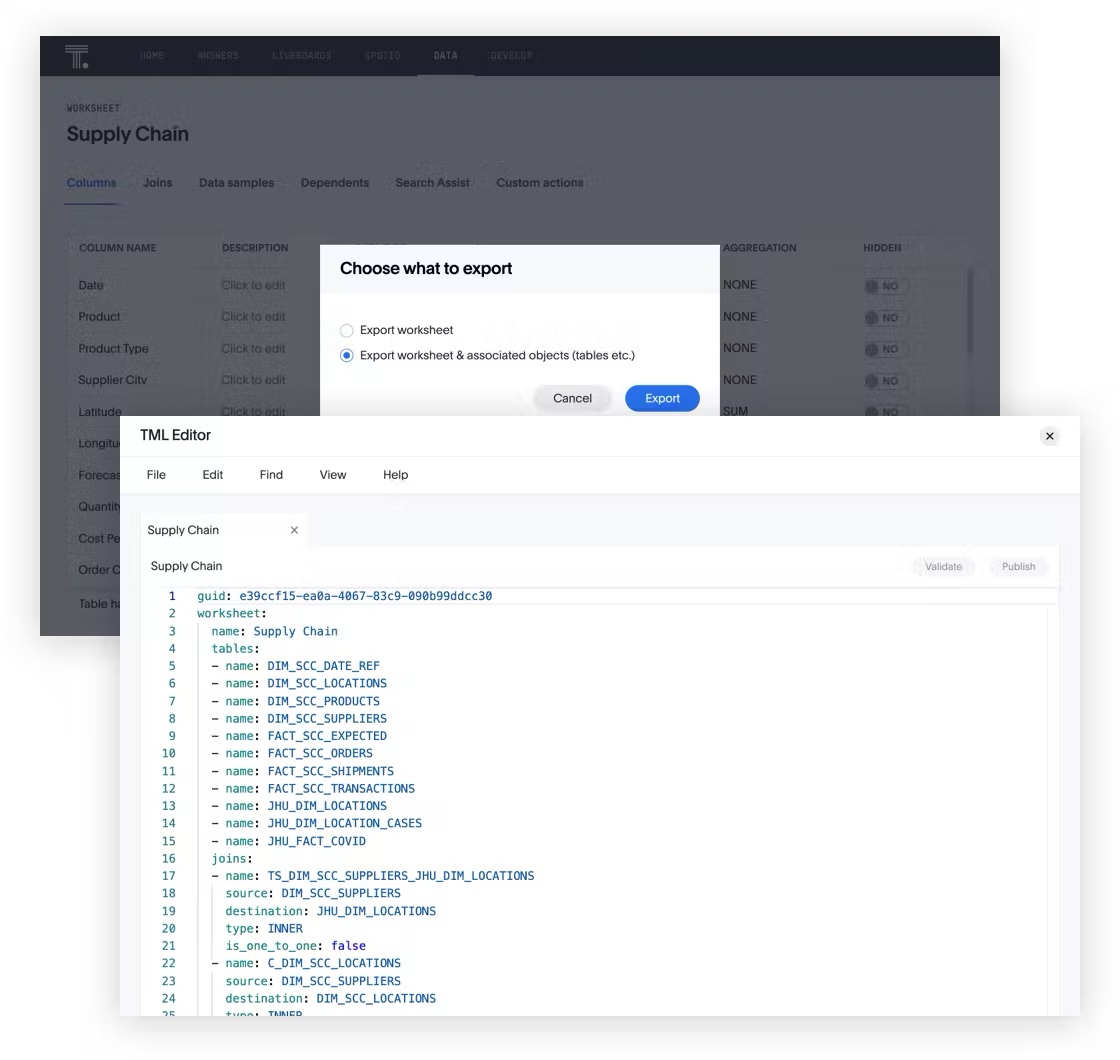

One tell-tale sign that a tool is composable is a scriptable interface. With LookML from Looker or TML from ThoughtSpot for analytics, and dbt for transformation, there is a modern suite of tools that allows you to define everything you do in code, using a configuration language. This has several benefits.

1. Version control:

This allows you to manage changes to the definitions of your analytics workloads, so you can revert an undesired change or understand what caused a change. Managing configuration this way makes large amounts of it much easier to handle. You can organize your code into logical units, make incremental changes together, and document the changes for posterity. If something breaks, you can easily go back to the last known good state.

2. Reuse:

You can also define things once and reuse them in as many places as makes sense. This makes it easy to create new but similar things. Bugs can also be fixed in one central place instead of many.

3. Knowledge sharing community:

Once you can represent what users do in a system in code, it becomes a powerful tool for knowledge sharing. Looker saw tremendous growth in the developer community because there was a community of people sharing how to do different things by sharing a piece of code. And we are seeing a similar trend with TML.

4. Automation:

Once user workflows are represented in code, it is fairly easy to automate them. This allows the tool to be leveraged at a much bigger scale than previously possible. For example, we provide self-service analytics to hundreds of customers who all start from the same core data model but differ slightly in need. Before TML, this would have taken an army of people to manage. Now, it is possible to completely automate it.
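To make the reuse and automation points concrete, here is a minimal sketch of the "same core data model, slightly different needs" scenario. It is plain Python rather than real TML or LookML, and every name in it (`CORE_MODEL`, `CUSTOMER_OVERRIDES`, `build_model`, `build_all`, the customers and metrics) is a hypothetical placeholder.

```python
import copy

# Hypothetical core model shared by every customer (not real TML or LookML).
CORE_MODEL = {
    "table": "orders",
    "metrics": {"revenue": "SUM(amount)", "order_count": "COUNT(*)"},
    "filters": [],
}

# Hypothetical per-customer differences, kept as small overrides.
CUSTOMER_OVERRIDES = {
    "acme": {"filters": ["region = 'EMEA'"]},
    "globex": {"metrics": {"avg_order": "AVG(amount)"}},
}


def build_model(core, overrides):
    """Return a customer-specific model: the core definition plus overrides."""
    model = copy.deepcopy(core)  # never mutate the shared definition
    model["metrics"].update(overrides.get("metrics", {}))
    model["filters"].extend(overrides.get("filters", []))
    return model


def build_all(core, overrides_by_customer):
    """Automation: generate every customer's model from the single core definition."""
    return {
        name: build_model(core, overrides)
        for name, overrides in overrides_by_customer.items()
    }
```

Because each customer's model is derived from the one core definition, a fix to `revenue` in `CORE_MODEL` reaches every customer the next time `build_all` runs. If the core model and the overrides live in version control, each change is also reviewable and revertible.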

The ability to work with other tools through APIs is another key point. Today, no tool exists in isolation. Data tools are often used both for their original purpose and as building blocks for other products. Modern data tools do a great job of exposing not just broad functional APIs but also fine-grained APIs that can really help a developer bend a product to their will. This makes these tools a lot more versatile and valuable for users.

Why should you care?

The modern data stack advocates a lot of changes, but if not done right they can be painful, expensive, and risky. Most often when I talk to data stack owners in the enterprise, the question is not so much how one tool compares to another but whether they can live with the status quo or have a strong enough desire to change. Here’s why I think change is necessary.

Greater efficiency

One of the biggest reasons the modern data stack is winning is that most of these systems are designed to a much higher standard of usability, manageability, and general human efficiency. Managing an MPP database used to take a team of people. Now cloud data warehouses can be managed with a fraction of your team’s bandwidth. Analysts often report that it takes them significantly less time to do an analysis in ThoughtSpot than in legacy analytics tools. Modern transformation tools can manage a large number of tasks with much less human intervention.

In an episode of The Data Chief, Darren Pedroza, Senior Director of Enterprise Data & Analytics at Western Alliance Bank and former VP of Enterprise Data and Analytics at First Command Financial Services, shared, "The power of looking at data from a pipeline perspective as opposed to a traditional warehouse perspective has really changed our game. The role of the cloud with our data strategy is all about flexibility, agility, and democratization."

Innovation through agility

Which leads to my next point. You can tell how innovative a company is by measuring how long it takes to go from an idea to validation (or invalidation). If you look at the difference between companies that innovate at a fast pace and their stagnant counterparts, it is not so much that the latter lack people with great ideas. It’s that the former have a much better environment for supporting innovation. Most ideas at their inception are not great. You have to go through several iterations to get to a good one. If the cost of validating or invalidating a hypothesis is too high, quite often people don’t even get started. In today’s world, the ability to quickly get the data that can validate or invalidate your hypothesis is not just a competitive advantage but, in some cases, the difference between survival and extinction.

In the words of American Express CDO, Pascale Hutz, "... Data has to be a living, breathing kind of organism. And when you have that mindset, you don't really think of data as done. Data's never finished. Platforms are never POA. They never point of arrival because there's always something else coming. And as long as you have that data mindset and… data as a product, we're keeping pace with the evolving technology and making sure that we're headed in that direction wherever that may go. Today, it's cloud, but five years from now, I bet you it's going to be something else and we just have to get ready, be ready for it."

Velocity

At a startup with few employees and customers, everyone can be lean with almost any toolset. But as you start seeing hyper-growth, complexity in your data stack hits faster than you might expect. Scaling data is a relatively easy problem to solve: just throw more money at it. But scaling unneeded complexity traps you in a world of technical debt and frustration. I have seen many mid-size companies maintain hundreds of dashboards, supported by hundreds of transformation jobs, without knowing which ones are delivering value, while still battling a month-long backlog of requests to serve the business with data. And let me tell you, it doesn’t end well.

That was also the case for John Hughes, the Modern Milk Man’s Chief Strategy Officer. The company took a risk when it devoted nearly a quarter of its original funding to modernizing its data platform. But after seeing his share of inefficient legacy technology at larger operations, John was determined to ensure that this startup was equipped with the most effective modern data tools for the job. Thanks to this investment, the company is now able to market more intelligently to customers, forecast operational gaps, and quantify environmental impact.

How to adopt the modern data stack

Shift your mindset

The modern data stack is more than a change in tools; it’s a change in mindset and culture as well. Cloud data warehouses like Snowflake and Google BigQuery offer architecture and pricing that are designed for you to grow. You can store as much history as you want and respond to new data requests in an agile way. This shatters many walls that existed before.

Similarly, self-service analytics tools like ThoughtSpot encourage analysts to focus on strategic things like improving the data model and empowering business users to ask and answer their own data questions. Augmented BI tools encourage business users to explore deeper into why instead of chasing the what.

Any transition to a modern data stack will not yield the desired results unless there is a desire within the organization to change how it operates.

Go with the best-of-breed

The industry tends to go back and forth between choosing the best solution for each layer of the stack and choosing the best ecosystem. Usually, when there is a lot of innovation in a space, best-of-breed works much better than vertical integration. When the space matures, some of the big technology vendors tend to catch up on the innovation (mostly through acquisitions), which drives even more efficiency through integration. Right now definitely seems like a good time to select the best tool for each layer of the stack. While each of the cloud infrastructure providers (e.g. AWS, GCP, Azure) has its own tools at each layer, they care a lot more about customers choosing their cloud and having a good experience than about winning at every layer. As a result, they have been fairly open and supportive of anyone who builds a great experience for their customers.

Build incrementally and vertically

As with most big changes, the best way to do this is to build a path with a lot of small wins along the way instead of making one big change. Sometimes I see people building their data stack horizontally, meaning they want all their data stored in the cloud before they even think about transformation or analytics. This approach delivers far less incremental business value along the way. It is much better to pick one vertical (e.g. Product Experience or Sales Operations) and build an entire stack that delivers value right away. In my experience, the winning formula seems to be: quick win, rinse, repeat.

Don’t let perfect be the enemy of good

This applies more to data democratization than to anything else. I often see teams hesitate to deploy modern tools that will empower business users because they are afraid users may shoot themselves in the foot. While this is a reasonable concern, it is not a new problem, and many teams have figured out how to do it in a well-governed way. The other common fear is "my data is not clean enough." One of the best ways to fix your data problems is to shine a bright spotlight on them and allow users to identify the problems for you.

Parting thoughts

The modern data stack is here to stay. It will evolve, improve, and see significant adoption. The organizations that adopt it well will be in a much better position to delight their customers and build an innovative, growing business. If you are in the process of building your own data stack, you’re on the right track. And if you have to transition, just remember to choose a path with lots of incremental wins.
