Let’s be honest: AI isn’t actually coming for your job. But it might be making the wrong decision on your behalf.

The explosion of AI in business tools, especially analytics, is exciting but can also be deeply risky. The risks range from hallucinated insights to silent bias, and AI is no longer just answering questions. In some cases, it’s acting on them. That shift has real consequences.

This isn’t a thinkpiece about killer robots or the singularity. It’s about the very real risks that show up when you ask AI to power decision-making, without questioning how those decisions are made, where the data comes from, or whether you’re still in the loop.

Let’s break down the biggest dangers of AI, what they mean in practice, and how you can avoid them.

We’re not talking about futuristic humanoid bots. We’re talking about systems often powered by large language models (LLMs) or machine learning that can generate answers, surface patterns, and even trigger actions based on data.

Some AI tools write code. Others summarize dashboards. Increasingly in the analytics space, we’re seeing AI agents that don’t have to wait for you to ask questions; they can monitor your data, spot anomalies, recommend next steps, and act.

That’s a big leap. And it makes the question of AI safety a lot more urgent.

The risks of AI aren’t theoretical; they show up in your everyday decisions, tasks, and tools, often without you noticing. A flawed insight here, a biased recommendation there, and before you know it, you’re making calls based on outputs you can’t trace, from systems you don’t fully control.

Here’s what those risks actually look like when AI is embedded in your analytics stack:

1. Hallucinated insights

Generative AI is confident even when it’s wrong. LLMs are trained to predict likely text, not verify facts. In analytics, that means your AI agent might confidently generate an output that sounds right, but has no connection to your actual data or is entirely fabricated. That’s an AI hallucination. And if that fake insight ends up in a board presentation or automated workflow? You’ve got a problem.

2. Hidden bias

AI inherits the flaws of its training data. That includes bias around race, gender, geography, or historical behavior. Even if you’re feeding in your own enterprise data, any models layered on top can introduce skewed assumptions, and those biases can shape recommendations, predictions, or customer-facing actions.

3. Black-box decision making

AI can give you answers without showing its work. If you can’t trace how it got there, you can’t validate it, reproduce it, or defend it. And in industries like finance, healthcare, or government, that’s not just risky, it’s noncompliant.

4. Automation without oversight

AI is evolving from a tool into a decision-maker. But giving autonomous agents too much freedom without clear thresholds or controls can lead to misguided actions, like flagging the wrong customer segment for churn outreach or triggering inventory changes based on bad data.

5. Data misuse and leakage

Some AI tools learn from what you type, even if that includes sensitive customer info, internal KPIs, or proprietary logic. Without clear boundaries, a model can memorize confidential data and surface it outside your org, especially if the tool is a consumer-facing LLM.

6. Security vulnerabilities

Prompt injection, model spoofing, and adversarial attacks are real concerns. A malicious actor could manipulate an AI system’s output or trick it into revealing restricted data. If your analytics layer isn’t secure, you’re adding a new attack surface without knowing it.
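
One practical guard against the data leakage risk above is to scrub obvious identifiers before any text leaves your environment. The sketch below is a minimal illustration, assuming a hypothetical `redact` helper and two deliberately simple patterns (email addresses and long digit runs). It is not a complete PII filter, and real deployments usually need much broader detection.

```python
import re

# Simplified, illustrative patterns; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_NUMBER = re.compile(r"\b\d{9,}\b")  # e.g. account-like or card-like numbers


def redact(text: str) -> str:
    """Replace email addresses and long digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_NUMBER.sub("[NUMBER]", text)


# Hypothetical prompt that would otherwise be sent to an external LLM.
prompt = "Summarize the ticket from jane.doe@example.com about account 123456789012"
safe_prompt = redact(prompt)
print(safe_prompt)
```

Running the redaction before the prompt is sent means the external model never sees the raw identifiers, whatever its retention policy turns out to be.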

Most conversations about AI safety happen after something goes wrong. But if you’re using AI in your analytics stack today—whether for summarizing dashboards, writing SQL, or triggering actions—you’re already in territory where mistakes have real consequences.

AI safety isn’t just a technical concern. It’s something every data team, product leader, and business stakeholder should care about, because it shapes how AI behaves right now.

With agentic analytics, where AI doesn’t just assist but can act autonomously, the stakes are even higher without proper guardrails in place. For instance, an agentic analytics use case might involve adjusting marketing spend in real time based on campaign performance—powerful, but potentially risky if not monitored or constrained correctly.

Here’s what responsible AI should look like when it’s driving decisions, not just describing them:

1. Grounded in real data, not generative guesswork

What it means:

LLMs are great at generating human-like language. But on their own, they don’t actually know anything. That’s why they “hallucinate”: they’re just predicting the next word, and can easily make up numbers, charts, or sources.

Why it matters:

If your AI analytics tool is disconnected from real-time, governed data, you risk acting on completely false insights delivered with total confidence.

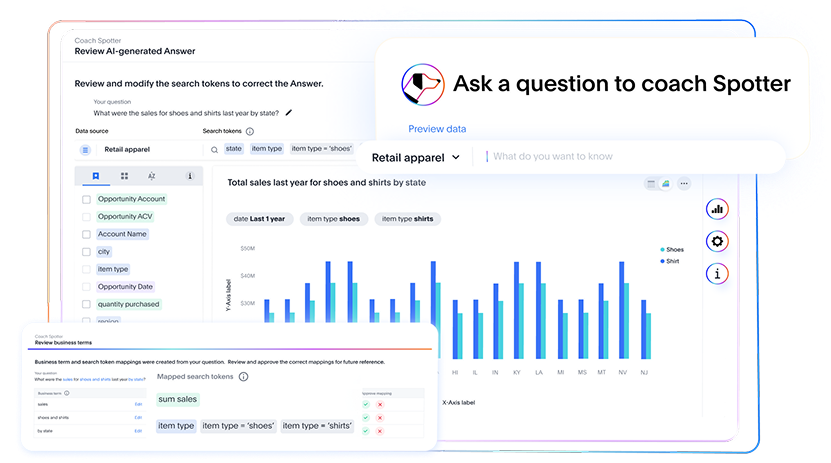

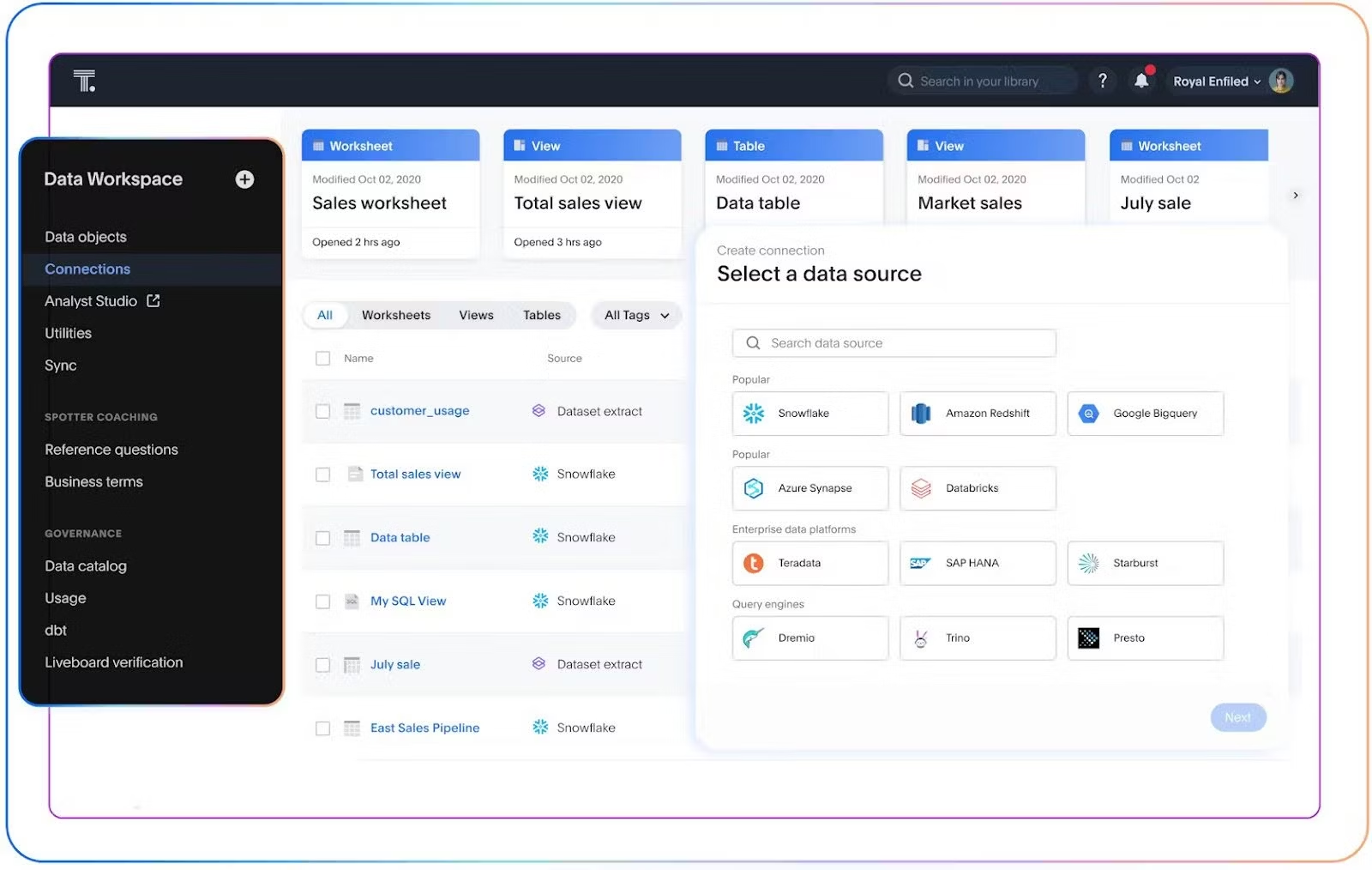

ThoughtSpot’s Spotter, your AI agent, is always grounded in your actual cloud data. Whether you're using Liveboards, search, or automated monitoring, the results are based on your governed sources, not AI-generated estimates. You always stay connected to the truth.
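
To make the idea of grounding concrete, here is a generic sketch (not ThoughtSpot’s implementation) of checking the figures in an AI-generated summary against values pulled from a governed source. The `Claim` class, the `check_grounding` function, the metric names, and the 1% tolerance are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    metric: str   # metric name the AI output refers to
    value: float  # figure the AI stated


def check_grounding(claims, governed_values, rel_tol=0.01):
    """Return (claim, reason) pairs for claims that governed data does not support."""
    failures = []
    for claim in claims:
        if claim.metric not in governed_values:
            failures.append((claim, "metric not found in governed data"))
        elif not math.isclose(claim.value, governed_values[claim.metric], rel_tol=rel_tol):
            failures.append((claim, "value does not match governed data"))
    return failures


# Hypothetical values, standing in for the result of a governed query.
governed = {"q3_revenue": 1_250_000.0, "churn_rate": 0.042}
ai_claims = [
    Claim("q3_revenue", 1_250_000.0),  # matches the source
    Claim("churn_rate", 0.08),         # contradicts the source
    Claim("nps", 61.0),                # has no source at all
]

failures = check_grounding(ai_claims, governed)
for claim, reason in failures:
    print(f"{claim.metric}: {reason}")
```

A check like this treats the language model as a narrator only: any number it states has to be matched to a governed value before it reaches a report.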

2. Explainability and traceability, so you can trust every step

What it means:

Explainability means you can understand how an AI system arrived at its output. Traceability means you can follow the full lineage, from data source to logic to output.

Why it matters:

If AI generates a KPI or recommends action, you need to validate it. Especially in regulated environments, “the AI said so” doesn’t cut it.

AI-augmented dashboards in ThoughtSpot show the steps behind every insight, chart, or recommendation, whether it’s AI-assisted SQL, a drill-down analysis, or an automated anomaly alert. You can view the logic, see the filters, and audit the outcome.
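
As a rough illustration of what traceability can look like in data terms, the sketch below stores the lineage of a single insight (source tables, query, filters, and the component that produced it) as one audit-log line. The `InsightTrace` structure, its field names, and the example query are hypothetical, not a description of any product’s schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class InsightTrace:
    insight: str         # the statement shown to the user
    source_tables: list  # where the data came from
    query: str           # the logic that produced the number
    filters: dict        # filters applied at query time
    generated_by: str    # which model or agent produced the insight
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(trace: InsightTrace) -> str:
    """Serialize a trace as a single JSON line suitable for an append-only log."""
    return json.dumps(asdict(trace), sort_keys=True)


# Hypothetical example record.
trace = InsightTrace(
    insight="Weekly churn rose in the EMEA segment",
    source_tables=["analytics.subscriptions"],
    query=(
        "SELECT region, COUNT(*) FROM analytics.subscriptions "
        "WHERE status = 'churned' GROUP BY region"
    ),
    filters={"region": "EMEA", "period": "last_7_days"},
    generated_by="example-agent-v1",
)
audit_line = to_audit_line(trace)
print(audit_line)
```

With a record like this attached to every output, “the AI said so” becomes a query and a set of filters that someone can rerun and defend.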

3. Strong governance, by default

What it means:

Governance isn’t just about who can access data. It’s about ensuring AI systems respect those boundaries and operate within them, every time.

Why it matters:

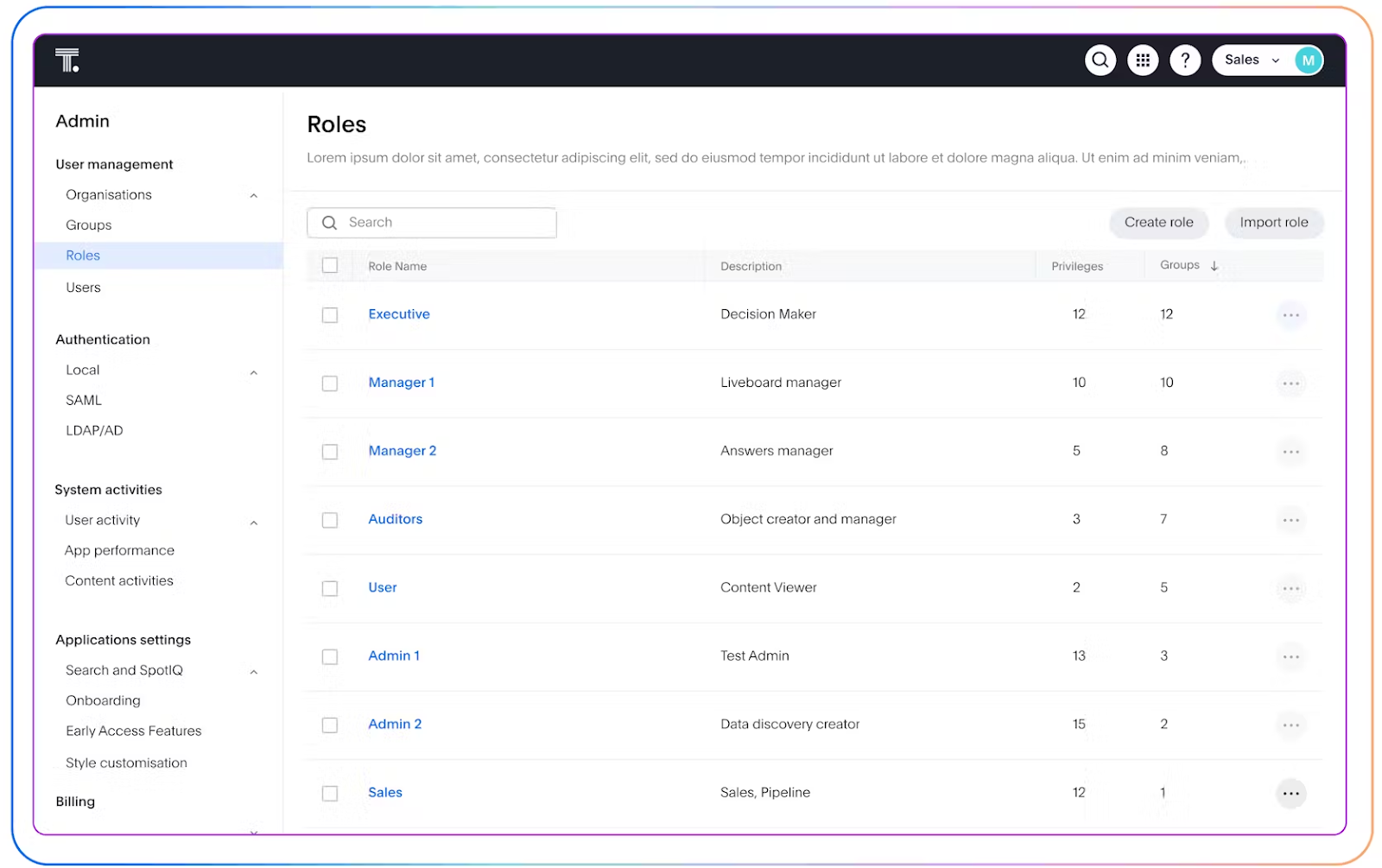

An AI agent that can access all your data regardless of role, context, or security policy isn’t smart. It’s dangerous.

ThoughtSpot is built with enterprise-grade governance: role-based access control, row-level security, and native integration with your cloud data permissions. Agents and AI features operate within the same guardrails, so they can’t “leak” insights across teams, departments, or geographies.
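
The principle that an agent should only see what the requesting user may see can be sketched in a few lines. This is a simplified, generic illustration of row-level scoping, not how any particular platform enforces it; the `User` class, the `visible_rows` and `agent_summary` functions, and the sample data are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    role: str
    regions: frozenset  # regions this user is allowed to see


def visible_rows(user, rows):
    """Apply row-level security: admins see everything, others only their regions."""
    if user.role == "admin":
        return list(rows)
    return [row for row in rows if row["region"] in user.regions]


def agent_summary(user, rows):
    """The agent only ever receives rows already scoped to the requesting user."""
    scoped = visible_rows(user, rows)
    total = sum(row["revenue"] for row in scoped)
    return f"{len(scoped)} rows, total revenue {total}"


# Hypothetical data and users.
rows = [
    {"region": "EMEA", "revenue": 100},
    {"region": "APAC", "revenue": 250},
    {"region": "AMER", "revenue": 400},
]
analyst = User("dana", "analyst", frozenset({"EMEA"}))
admin = User("sam", "admin", frozenset())

print(agent_summary(analyst, rows))
print(agent_summary(admin, rows))
```

The design choice that matters is where the filter sits: the scoping happens before the agent receives any data, so there is nothing for it to leak across teams.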

4. Human-in-the-loop, always

What it means:

AI shouldn’t replace human judgment; it should enhance it. A responsible system is built with a human-in-the-loop approach, giving you the ability to review, adjust, or override what the AI recommends before anything happens.

Why it matters:

Full automation without oversight is risky. You want AI to be proactive, but not so autonomous that it makes irreversible decisions before a person can review them.

In ThoughtSpot, AI agents can surface anomalies, forecast KPIs, or trigger alerts, but they don’t take action blindly. You remain in control. You can define thresholds, review flagged issues, or pause automations before any action moves downstream. With the right agentic analytics platform, your AI can act with you.
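
Thresholds and pause controls like the ones described above can be pictured as a small routing function. The sketch below is a hypothetical example built around the marketing-spend scenario: the `ProposedAction` class, the `route_action` function, and the 5% auto-approve limit are illustrative assumptions rather than a real product API.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    description: str
    budget_change_pct: float  # signed percentage change the agent wants to make


def route_action(action, auto_approve_limit_pct=5.0, paused=False):
    """Decide whether a proposed action is applied, held, or sent to a person."""
    if paused:
        return "held"  # automations are paused: nothing moves downstream
    if abs(action.budget_change_pct) <= auto_approve_limit_pct:
        return "auto_apply"  # small change, within the agreed threshold
    return "needs_human_review"  # large change: a person must sign off


small = ProposedAction("Shift budget toward campaign A", 3.0)
large = ProposedAction("Cut spend on campaign B", -40.0)

print(route_action(small))
print(route_action(large))
print(route_action(small, paused=True))
```

Setting `auto_approve_limit_pct` to zero turns every proposal into a review item, which is a reasonable starting point until the team trusts the agent’s track record.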

5. Built for enterprise environments

What it means:

Most generative AI tools were designed for individual users (think ChatGPT). Enterprise AI has higher stakes around compliance, data protection, uptime, and auditability.

Why it matters:

If you’re applying consumer-grade AI tools to business-critical workflows, you’re accepting a level of risk those tools were never built to handle.

ThoughtSpot was built for AI-native BI. It doesn’t just plug into your cloud ecosystem: it respects your data architecture, security policies, and user roles. The platform also lets you plug your own LLMs into governed workflows, so you get the benefits of GenAI without giving up control.

At its root, AI safety isn’t just about avoiding worst-case scenarios. It’s about making sure your systems are designed to support clarity, context, and control at scale. Platforms like ThoughtSpot don’t bolt these principles on after the fact; the principles are built into how the Agentic Analytics Platform works, right from the foundation.

That’s what makes AI not just powerful, but actually usable in the enterprise.

The best way to stay ahead of AI risks is to ask better questions before letting it drive your business decisions. Here are a few to start with:

1. Where does this AI system pull its data from, and is it up to date?

Outdated or incomplete data can lead to faulty insights and misguided actions. Before you let any AI system make decisions on your behalf, ask what data sources it connects to, how often that data is refreshed, and whether it reflects the latest business reality. Real-time action requires real-time inputs.

2. Can I trace the steps behind every insight or action?

If the AI recommends or initiates something like pausing a campaign or changing inventory levels, can you see why? Auditability matters, especially when you're held accountable for the outcome. You need a clear, human-readable explanation of how the AI got from input to action.

3. What governance controls are in place?

Who can access, change, or override the system? Can teams set policies, thresholds, or approvals? Governance isn’t just a safety net; it’s the foundation for using AI responsibly in regulated or complex environments. Your platform should support granular controls, versioning, and monitoring.

4. Who’s accountable if the AI gets it wrong?

Mistakes are inevitable, but what matters is how they're handled and who is ultimately responsible. Is there a clear owner for reviewing incidents? Are incidents logged? Does the platform provide a way to learn from misfires and prevent them in the future? If accountability is unclear, errors are more likely to go unnoticed and repeat.

5. Can a person review or reverse decisions made by the system?

Even if AI acts autonomously, human oversight should always be present. Can someone intervene before a decision is executed, or quickly roll it back if needed? This matters not just for trust, but also for adapting to edge cases or business changes.
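
Some of these questions can be turned into automated checks. As one example, the first question (is the data up to date?) might be enforced with a freshness gate that blocks an action when its inputs are too old. The function names and the one-hour limit below are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_refreshed, max_age, now=None):
    """True if the data was refreshed within max_age of the current time."""
    now = now or datetime.now(timezone.utc)
    return (now - last_refreshed) <= max_age


def gate_action(last_refreshed, max_age, now=None):
    """Allow an automated action only when its input data is fresh enough."""
    if is_fresh(last_refreshed, max_age, now):
        return "proceed"
    return "blocked_stale_data"


# Fixed timestamps so the example is reproducible.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
refreshed_recently = datetime(2025, 1, 1, 11, 30, tzinfo=timezone.utc)
refreshed_yesterday = datetime(2024, 12, 31, 12, 0, tzinfo=timezone.utc)

print(gate_action(refreshed_recently, timedelta(hours=1), now))
print(gate_action(refreshed_yesterday, timedelta(hours=1), now))
```

The right `max_age` depends on the decision: an hourly limit may suit real-time spend changes, while a daily one may be enough for weekly reporting.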

The future of analytics isn’t just about speed or volume; it’s about making the right call at the right time. As AI begins to take action on your behalf, you need more than black-box predictions or vague recommendations. You need systems that explain their logic, adapt to your business context, and give you the final say.

ThoughtSpot was built for this shift. With agent-powered workflows, transparent decision paths, and enterprise-grade governance, you get more than insights; you get intelligent action you can trust.

See how it works in practice. Book a demo and explore agentic analytics built for business.

Dangers of AI FAQs

1. What does it mean when AI “hallucinates” insights?

AI hallucination is when a system generates information that sounds correct but has no basis in your actual data. In analytics, this could mean made-up KPIs, charts, or trends, any of which can slip into dashboards, reports, or decisions unnoticed.

2. How does bias creep into AI-generated analytics?

Bias often comes from the data AI models are trained on, whether that's public internet data or historical enterprise data. If that data reflects past inequalities or blind spots, the AI can reinforce them in its outputs, affecting predictions and recommendations.

3. What happens if AI takes the wrong action on my behalf?

Autonomous AI agents that act without oversight can do real damage: flagging the wrong customer segment, changing pricing or inventory, or pausing campaigns based on faulty logic. The danger increases if you’re not in the loop to catch or correct it.

4. What makes these risks hard to spot?

AI outputs often look polished and convincing, even when they’re wrong. And many analytics teams assume “AI means better.” That combination of overconfidence and invisibility makes dangers like bias, hallucination, and misuse especially easy to miss.