The GPT euphoria was doused with some reality recently when Samsung employees leaked confidential information through ChatGPT and Italy outright banned ChatGPT. The hype and the concerns accelerated further last week, with Geoffrey Hinton, often called the godfather of AI, resigning from Google, President Biden summoning AI leaders to Washington, and several stocks nose-diving on the threats generative AI poses to their business models. I see these setbacks as normal teething problems for a disruptive technology, and I remain optimistic about the ways GPT will profoundly reshape the data and analytics landscape for the better.

First-mover advantage and imagination matter

As I think back on key disruptions in the data and analytics industry across my 30 years in this space, much of the disruption has been incremental, so it was normal for vendors and customers alike to take a wait-and-see approach. There’s a mindset in enterprise IT: never adopt a dot-zero release. It’s software, after all, and software can be buggy. But let’s think back to two major industry shifts: the internet and the cloud.

Back in 1999, I had just left my job at Dow as a global BI leader to get my MBA. I did an independent research project on how the internet would reshape the BI industry by easing software distribution and increasing adoption. As we know, floppy disks are now collector’s items, and the internet is foundational to the digital economy. My final exam for marketing that year asked whether the Yellow Pages should pursue an internet strategy. My thesis was, “Yes, and first-mover advantage matters.” My professor gave me a C, and when I asked him to explain the low grade, he declared that dial-up was too slow and that the internet would never be mainstream. We may chuckle in hindsight, but there are times when some people cannot see past the current state of technology. To be fair to my professor, he lacked the networking and technical background to understand how quickly browsers and high-speed internet were progressing.

More recently, cloud data platforms began taking off in 2012, yet as late as 2020 I recall some customers still refusing ever to move to the cloud, often because of misplaced fears about security and loss of control, and the classic “not invented here” syndrome. The pandemic accelerated many organizations’ move to the cloud. Those that had already started their digital transformation and cloud journeys have shown two to three times the revenue growth of laggards, with a potential profit improvement of 81%.

I think back to a remark one COO made about the data and analytics industry: rather than a talent shortage, we have a shortage of imagination. I agree with his assessment, and right now is precisely when we need more imagination about how to use generative AI for revolutionary, rather than incremental, improvements in data and analytics. We are at a seminal moment with generative AI, in which first movers will gain an outsized and lasting competitive advantage over followers. First movers who act recklessly, without education and guardrails, will get burned. Those who embrace generative AI while respecting AI ethics and explainability will be the winners. Here are four ways generative AI like GPT will change the data and analytics industry.

GPT will change the entire data and analytics workflow.

1. Data capture and operational processes

Digital interactions have enabled consumers to shop online more intelligently, and search interfaces have helped match customers with products. But AI assistants are about to get a boost as smarter agents lighten the load for overworked operational workers. Historically, many chatbots were rules-based. So if you call your doctor or visit their website, a bot may follow a rigid, scripted process limited to scheduling an appointment or refilling a prescription. With generative AI, this bot could now answer first-line medical questions and suggest home-care options. A human in the loop is essential, but nurses can answer patients significantly faster. If the nurse recommends an in-person doctor visit, all the information and symptoms can readily populate the electronic medical record.

Ultimately, I expect internal and external data to be fused more seamlessly, spanning semi-structured and synthetic data. For example, in retail, when a business user asks, “How likely is Cindi to buy our latest product?” they see a picture of a Cindi-like avatar, along with images and details of her past purchases from internal data, external demographic data, credit card patterns, and a propensity score.

2. Data modeling and metadata

With the rise of the data mesh, ownership of data products is shifting to lines of business. Deeper domain expertise, along with low-code products, is enabling this shift. And yet connecting to data, cleansing it, and transforming it for analytics currently requires a high degree of technical skill. The ability to use GPT and other LLMs to generate reasonable code for such tasks will enable smarter low-code experiences.

Business metadata in particular is onerous and laborious to create. As important as data catalogs are for ensuring a common understanding of business terms, the work of populating descriptions and fields with such metadata is one reason research from the Eckerson Group shows low adoption of data catalogs. GPT’s ability to generate synonyms and explain how things are calculated is an area where I expect significant improvement.

ThoughtSpot Sage, for example, uses GPT to generate synonyms for the data model. Atlan, the cloud-native modern data catalog, recently announced Atlan Co-Pilot, expected to launch in mid-June.

Calculating metrics within a data model or within analytics tools requires learning a precise syntax specific to each platform. Where the parentheses go, and whether values take single or double quotes, differs from tool to tool.

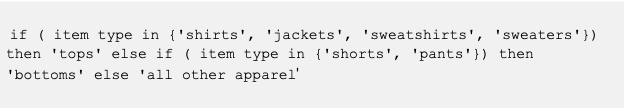

For example, to create a formula that groups certain products using IF-THEN-ELSE, ThoughtSpot puts the values in single quotes, while LookML expresses the same logic in its own syntax.

A similar formula in Excel would use double quotes around each value and a slightly different syntax. In straight SQL, or in metrics layers such as dbt, one might use a CASE statement to get the same output. GPT can be an amazing accelerator here, drafting the right syntax for whichever tool you are using.
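To make the quoting differences concrete, here is a minimal Python sketch that renders one hypothetical grouping rule in two of the dialects mentioned above: a SQL CASE expression, which wraps string values in single quotes, and an Excel IF formula, which wraps text in double quotes. The product names, the `product` column, the `A2` cell reference, and the helper names `sql_case` and `excel_if` are illustrative assumptions; ThoughtSpot and LookML output is not shown, and values containing quote characters are not escaped.

```python
# Hypothetical grouping rule: these products roll up into "Summer"; everything else is "Other".
SUMMER_PRODUCTS = ["Swimwear", "Sandals"]


def sql_case(column: str, values: list[str], label: str, default: str) -> str:
    """Render the rule as a SQL CASE expression; SQL string literals use single quotes."""
    # Note: no escaping of embedded quotes; this is a sketch, not a code generator.
    in_list = ", ".join(f"'{v}'" for v in values)
    return f"CASE WHEN {column} IN ({in_list}) THEN '{label}' ELSE '{default}' END"


def excel_if(cell: str, values: list[str], label: str, default: str) -> str:
    """Render the rule as an Excel formula; Excel text values use double quotes."""
    # OR() takes one comparison per value, e.g. A2="Swimwear".
    tests = ",".join(f'{cell}="{v}"' for v in values)
    return f'=IF(OR({tests}),"{label}","{default}")'


if __name__ == "__main__":
    # CASE WHEN product IN ('Swimwear', 'Sandals') THEN 'Summer' ELSE 'Other' END
    print(sql_case("product", SUMMER_PRODUCTS, "Summer", "Other"))
    # =IF(OR(A2="Swimwear",A2="Sandals"),"Summer","Other")
    print(excel_if("A2", SUMMER_PRODUCTS, "Summer", "Other"))
```

The Excel output uses comma argument separators, as in US-English locales; some locales use semicolons instead, which is one more per-tool detail that is easy to get wrong by hand.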

3. Democratization of insights

In 2007, I wrote that the power of search would enable everyone to access data, a vision based on the need I saw in the industry and on innovations at various startups. It would take another seven years for ThoughtSpot to release its first product. In the last three years, the shift to querying cloud data platforms live and the release of the Modern Analytics Cloud have broadened both the data available to users and how widely it is distributed. Search, while an easier interface than visual discovery tools designed for experts, still requires learning some syntax. For example, a business user might enter the search “Weekly Sales Swimwear Last Quarter.” Some elements of the search use indexed data (swimwear), while others (weekly and last quarter) rely on the intelligence in ThoughtSpot to simulate natural language.

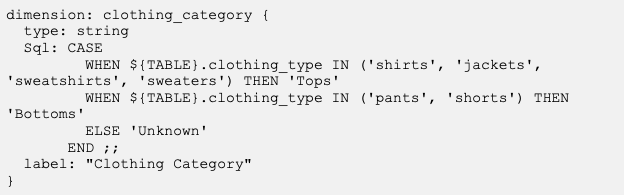

ThoughtSpot Sage, which combines GPT’s natural language processing and generative AI capabilities with ThoughtSpot’s patented search technology, makes it even more natural to pose a question, such as “Show me sales for bathing suits last quarter by week.” GPT automatically converts the text “bathing suits” to “swimwear” and ignores “show me.” I also could have said “show me revenues,” and ThoughtSpot Sage would understand that revenues and sales are synonyms, because GPT understands this; in the past, a modeler would have had to explicitly define revenue as a synonym for the sales field.

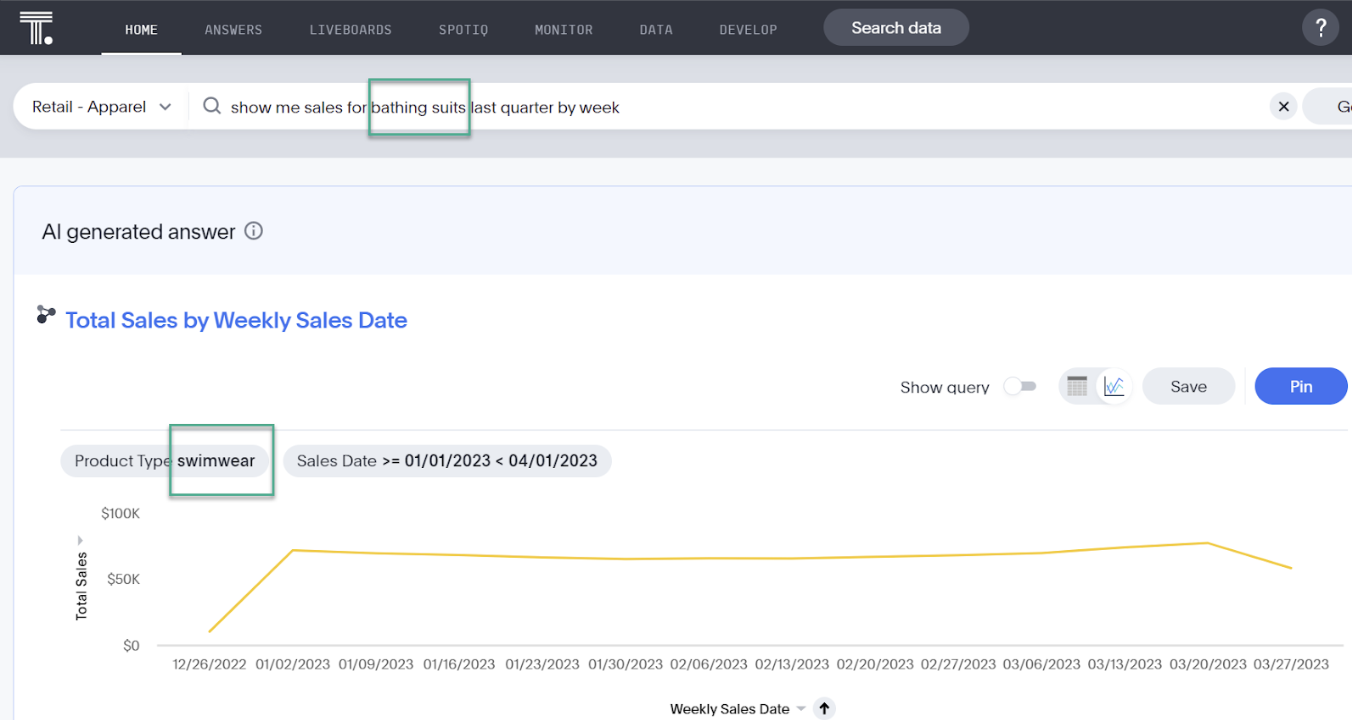

SQL Generated:

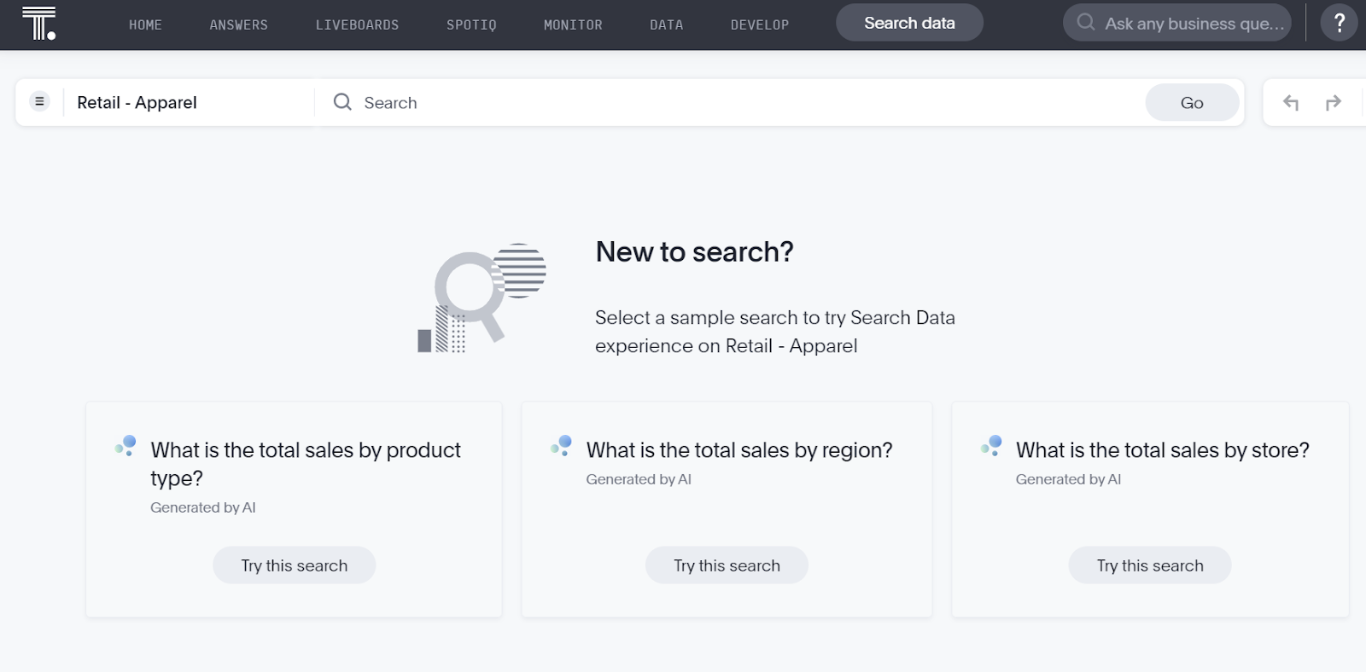
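For contrast, the explicit approach described above, where a modeler defines every synonym by hand, can be sketched in a few lines of Python. The synonym table, the canonical terms, and the `resolve_terms` helper are hypothetical and are not how ThoughtSpot or ThoughtSpot Sage work internally; the sketch only shows why a hand-maintained table misses any phrasing nobody thought to add.

```python
# Hypothetical hand-maintained synonym table: phrase -> canonical term in the data model.
SYNONYMS = {
    "sales": "sales",
    "revenue": "sales",
    "revenues": "sales",
    "swimwear": "swimwear",
}


def resolve_terms(question: str, synonyms: dict[str, str]) -> tuple[list[str], list[str]]:
    """Greedily match the longest known phrase at each position.

    Returns (resolved model terms, unresolved words). Keywords such as
    "last quarter" or "by week" would be handled separately by a real
    search engine; here they simply end up unresolved.
    """
    words = [w.strip(".,?!") for w in question.lower().split()]
    max_len = max(len(key.split()) for key in synonyms)
    resolved: list[str] = []
    unresolved: list[str] = []
    i = 0
    while i < len(words):
        # Try the longest candidate phrase first, then shorter ones.
        for n in range(min(max_len, len(words) - i), 0, -1):
            phrase = " ".join(words[i:i + n])
            if phrase in synonyms:
                resolved.append(synonyms[phrase])
                i += n
                break
        else:
            # No phrase starting at this word is in the table.
            unresolved.append(words[i])
            i += 1
    return resolved, unresolved


if __name__ == "__main__":
    print(resolve_terms("Show me sales for bathing suits last quarter by week", SYNONYMS))
```

With this table, "bathing" and "suits" stay unresolved until someone adds a row such as `"bathing suits": "swimwear"`. That per-phrase maintenance is the burden GPT's language understanding reduces.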

GPT not only makes searching easier; it also makes data more approachable by proposing possible ways to search, as ThoughtSpot Sage starts with three AI-suggested questions.

4. Data literacy

As we wrote in our annual top trends e-book, improving data literacy and data fluency is now a goal of data-savvy CEOs. And yet, many confuse technical literacy with data literacy. A foundation of data literacy is understanding key business terms and analytical concepts. I expect LLMs to move from training on public data sources to training on proprietary company data. Bloomberg was one of the first to announce its own LLM, trained on financial data, and Truveta and John Snow Labs have done the same for healthcare data. With this, AI’s ability to foster a common language and to accelerate onboarding and upskilling of employees will be huge. According to a recent DataCamp survey, 40% of organizations lack a specific budget to launch a formal data literacy program, even though organizations that have done so report improved decision-making and customer service. Applying AI here is a cost-effective way to deliver in-app, in-context training.

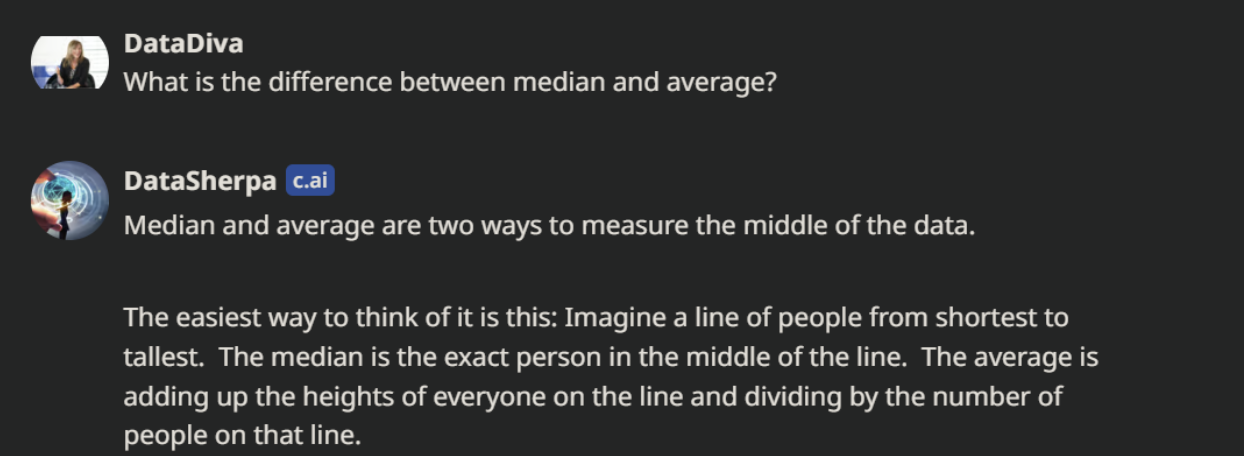

Further, only 21% of business people feel confident in their data literacy skills, while 54% of organizations say they have a data literacy skills gap. Nobody likes to feel stupid, and being able to ask an AI bot, rather than a potentially intimidating and overworked data expert, may reduce this friction. As an example, I recently created a bot named Data Sherpa using Character.ai (in beta) and asked it to explain median versus average.
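Whatever wording a bot uses, the distinction itself is easy to demonstrate. In this small Python sketch with made-up order values, adding one unusually large order doubles the average while the median moves only slightly, which is why the median often describes a typical value better in skewed data.

```python
from statistics import mean, median


def summarize(values: list[float]) -> tuple[float, float]:
    """Return (average, median) for a list of numbers."""
    return mean(values), median(values)


# Made-up order values in dollars.
orders = [10, 20, 30, 40, 50]
print(summarize(orders))  # average 30, median 30

# One unusually large order skews the data.
orders_with_outlier = orders + [210]
print(summarize(orders_with_outlier))  # average 60, median 35.0
```

With an even number of values, `statistics.median` averages the two middle values (30 and 40 here), which is why the second median is 35.0.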

With domain-specific LLMs, such bots will be an ideal way to improve data fluency, letting users ask things like “What does OTIF stand for?” (on time in full) or “How does our company define a customer?” (does this include free subscribers, or only paid customers?). The latest release of GPT can also work with image data, which could let someone upload a complex Sankey diagram and get a natural-language description of it.
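As an example of the kind of definition such a bot would need to know, here is a minimal Python sketch of an OTIF rate: the share of orders delivered both on time and in full. The `Order` records are hypothetical, and companies differ on details such as the delivery window that counts as on time and whether in full is measured per order or per line, so treat this as one common formulation, not a universal definition.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    on_time: bool  # delivered by the promised date
    in_full: bool  # delivered with the full ordered quantity


def otif_rate(orders: list[Order]) -> float:
    """Share of orders that were BOTH on time and in full (0.0 to 1.0)."""
    if not orders:
        return 0.0  # design choice: avoid dividing by zero
    hits = sum(1 for o in orders if o.on_time and o.in_full)
    return hits / len(orders)


# Hypothetical sample orders.
orders = [
    Order("A", on_time=True, in_full=True),
    Order("B", on_time=True, in_full=False),  # short-shipped
    Order("C", on_time=False, in_full=True),  # late
    Order("D", on_time=True, in_full=True),
]
print(f"OTIF: {otif_rate(orders):.0%}")  # OTIF: 50%
```

Returning 0.0 for an empty list is a choice made here for simplicity; a reporting tool might instead show the metric as undefined when there are no orders.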

“What if?” and “What could go wrong?”

Now is the time to imagine “what if” and to reassess the whole analytics workflow, from data capture to insight to action. It is also time to imagine what could go wrong, and to ensure there is a human in the loop for every AI interaction, along with a commitment to AI ethics. While some may wish to pause the AI race, I see now as the moment to lean in and scenario-model the good as well as the bad. Just as cybersecurity professionals engage ethical hackers, we need such hackers to envision all the ways AI can be abused and to build safeguards that minimize these risks. I think back to other seminal moments in our industry: Blockbuster no doubt wishes it had taken the internet more seriously. Ditto for Oracle, once the undisputed market leader in relational databases, which has been steadily losing market share to the likes of Snowflake and Google in the race for cloud data platforms.

To see and hear how generative AI is reshaping the industry, tune in to Beyond on demand.