Every day, AI systems and AI agents are making decisions about your loan applications, job interviews, and healthcare treatments. But what happens when these systems get it wrong, show bias, or can't explain their reasoning?

Responsible AI isn’t just an ethical checkbox; it’s how you build systems your users can trust.

Here’s a breakdown of the five principles and practical steps every leader should take to make AI work for people, not against them.

What is responsible AI (and why now)?

Responsible AI is a framework for developing artificial intelligence systems that are ethical, transparent, and accountable to the people who use them. It goes beyond just making algorithms work. In simple terms, it’s how you make sure your models don’t just perform well, but do the right thing.

Unlike traditional AI development that focuses primarily on technical performance, responsible artificial intelligence integrates ethical considerations from the very beginning. It starts at the design phase, weaving fairness, privacy, and accountability into every step.

The push for responsible AI practices has become urgent because AI systems are already shaping life-changing decisions in hiring, lending, healthcare, and even criminal justice. When those systems go wrong, it’s not just a data issue; it’s a trust issue that can cost your brand, your customers, and your credibility.

Building responsibly means combining:

Ethical guidelines: Standards that make sure your AI systems treat all users fairly and without discrimination

Governance structures: Clear oversight and accountability mechanisms for AI decision-making

Technical safeguards: Built-in protections that prevent harmful or biased outcomes

Transparency requirements: Processes that make AI decisions understandable and explainable

Why does responsible AI actually matter?

AI responsibility starts long before you build a model: it starts with how you manage data. As Dr. Cindy Gordon put it on a recent Data Chief podcast episode,

"Every leader must understand that they have a responsibility for data management. It's an underlying skill that we really have to build in all of our college, university, and high school programs...In order to ever get AI right, we've got to solve the data challenges."

That’s the foundation of responsible AI. When you put the right practices in place, you're not just checking a compliance box: you're building systems people can trust. It’s what allows your organization to adopt AI at scale without compromising on ethics, accuracy, or accountability.

What’s at stake with responsible AI: Compliance, trust, and credibility

The stakes are high. Regulatory penalties for noncompliance can reach millions of dollars, and reputational damage from biased or opaque AI systems can take years to repair. When employees or customers lose trust in your technology, adoption stalls and your entire AI strategy falters.

A responsible approach, on the other hand, builds credibility and gives you a competitive advantage. It shows your stakeholders that your AI decisions are fair, transparent, and grounded in reliable data.

That’s the kind of trust Verivox built when they embedded ThoughtSpot into their B2B platform. Their teams had been struggling with slow time-to-insight and limited visibility into performance data.

But once they put transparent, governed analytics directly into their workflows, adoption skyrocketed. Teams began monetizing data, and instant insights became the new normal, all while maintaining full trust and control over the process.

| Without Responsible AI | With Responsible AI |
|---|---|
| Risk exposure: Lawsuits, regulatory fines, brand damage | Protected operations: Compliance-ready, trusted brand |
| Limited adoption: User mistrust, employee resistance | Widespread use: High user confidence, employee buy-in |
| Reactive approach: Fixing problems after deployment | Proactive strategy: Preventing issues before they occur |

The 5 principles you need to know about responsible AI

Responsible AI isn’t just a mindset; it’s a discipline. These foundational principles guide how you can build AI systems that serve your users while protecting your organization from risk.

1. Fairness and bias prevention

Fairness means your AI systems treat all users equitably, regardless of race, gender, age, or other characteristics. Bias often slips through skewed training data or flawed algorithms, creating unfair outcomes that can damage both users and your reputation.

You have to continually identify and address these sources of unfairness before they become problems. This requires ongoing vigilance, not just a one-time audit.

Data auditing: Regular reviews to make sure your training data represents all user groups fairly

Algorithm testing: Evaluate model performance across demographic segments

Diverse teams: Involve people with different perspectives to challenge assumptions

Continuous monitoring: Track real-world performance to catch bias early
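To make the algorithm testing step concrete, here is a minimal sketch of comparing model accuracy across demographic segments. The group labels, the record layout, and the function names are assumptions for illustration, not part of any specific tool.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for records shaped as (group, y_true, y_pred)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records):
    """Largest accuracy difference between any two groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation records: (group label, actual outcome, predicted outcome)
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]

print(accuracy_by_group(records))
print(max_accuracy_gap(records))
```

A large gap does not prove bias on its own, but it tells you which segment to investigate first.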

2. Transparency and explainability

People need to understand how and why AI systems make decisions. Transparent AI models provide clear explanations for their outputs, making it easier to identify errors, biases, or unintended consequences.

Black box models that can't explain their reasoning undermine trust and make accountability nearly impossible. When something goes wrong, you need to understand why it happened and how to fix it.
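As a rough illustration of what an explanation can look like, the sketch below breaks a simple linear score into per-feature contributions. The feature names, weights, and bias are hypothetical, and real models usually need dedicated explainability techniques rather than this shortcut.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and per-feature contributions, largest effect first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and one applicant's (already scaled) feature values
weights = {"income": 2.0, "debt": -4.0, "tenure": 0.5}
features = {"income": 1.5, "debt": 1.0, "tenure": 4.0}

score, ranked = explain_linear_score(weights, features, bias=0.5)
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

Because each contribution is just weight times value, a reviewer can see which input pushed the decision up or down and by how much.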

3. Privacy and security

Responsible AI also means responsible data handling. Your AI systems must protect sensitive information and comply with regulations like GDPR and CCPA. AI introduces unique security risks, such as adversarial attacks designed to fool models or data leakage from training sets.

Privacy-preserving techniques like data anonymization and differential privacy help minimize these risks while keeping your systems compliant with evolving regulations.
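For a sense of how differential privacy works in practice, here is a minimal sketch of the Laplace mechanism applied to a simple count. The dataset, the `private_count` helper, and the choice of epsilon are illustrative assumptions. Production systems should rely on a vetted privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of values matching predicate.

    A count changes by at most 1 when one person is added or removed,
    so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: customer ages; the query asks how many are 65 or older
ages = [34, 71, 52, 68, 45, 80, 29]
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0)
print(round(noisy, 2))
```

A smaller epsilon adds more noise and gives stronger privacy; a larger epsilon gives more accurate answers with weaker protection.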

4. Accountability and governance

You need clear ownership structures for your AI systems. Define who is responsible for AI-driven outcomes, establish governance committees, and document your decision-making processes thoroughly.

Audit trails and comprehensive documentation provide traceability and help you demonstrate compliance to regulators when questions arise.
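One possible shape for an audit trail is an append-only log in which each entry stores a hash of the previous one, so later tampering is detectable. The field names and the `append_entry` and `verify_log` helpers below are assumptions for illustration only.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder hash used before the first entry


def _hash_body(body):
    """Hash an entry's fields in a stable key order."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, model_id, inputs, decision, owner):
    """Append one decision record, chained to the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "inputs": inputs,
        "decision": decision,
        "owner": owner,  # the person or team accountable for this outcome
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS_HASH,
    }
    entry["entry_hash"] = _hash_body(entry)
    log.append(entry)
    return entry


def verify_log(log):
    """Return True if no entry was altered and the chain is unbroken."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "entry_hash"}
        if entry["prev_hash"] != prev_hash or _hash_body(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


# Hypothetical usage
audit_log = []
append_entry(audit_log, "loan-scoring-v2", {"income": 52000}, "declined", "credit-risk-team")
append_entry(audit_log, "loan-scoring-v2", {"income": 88000}, "approved", "credit-risk-team")
print(verify_log(audit_log))
```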

5. Safety and reliability

AI systems should perform consistently and safely, even under changing conditions. That means rigorous testing before deployment and continuous monitoring afterward.

Fail-safe mechanisms, like human-in-the-loop controls, add an important layer of protection against unintended consequences. Regular performance monitoring and risk assessments help maintain reliability as your data and operating conditions change over time.
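A human-in-the-loop control can be as simple as routing low-confidence predictions to a reviewer instead of acting on them automatically. The sketch below assumes the model reports a confidence between 0 and 1; the 0.9 threshold and the `route_decision` name are illustrative choices, not recommendations.

```python
def route_decision(prediction, confidence, threshold=0.9):
    """Auto-apply confident predictions; send the rest to a human reviewer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return {"action": "auto", "decision": prediction}
    # Below the threshold the model only suggests; a person makes the call.
    return {"action": "human_review", "decision": None, "suggested": prediction}


print(route_decision("approve", 0.97))
print(route_decision("decline", 0.62))
```

The right threshold depends on the cost of a wrong decision, so it is worth revisiting as part of your regular risk assessments.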

As JoAnn Stonier shared on the Data Chief podcast,

"My hope is that more and more companies, organizations, academics, and governments are beginning to understand that [AI] is a whole new field that needs human intervention now..."

How to implement responsible AI in your organization

Moving from principles to practice takes a clear, actionable plan. These five steps provide a roadmap for building AI systems you can trust.

Step 1: Assess your current AI systems

Start by cataloging every AI system currently in use or development across your organization. This inventory helps you spot gaps in your policies and prioritize where to focus your efforts first.

Assessment checklist:

AI inventory: Document all existing AI systems and their purposes

Risk evaluation: Identify potential harms and benefits for each system

Stakeholder mapping: List all groups affected by your AI decisions

Compliance review: Check alignment with current and upcoming regulations
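The checklist above can be captured in a lightweight inventory. This is a minimal sketch: the `AISystem` fields, the 1 to 5 scoring scales, and the example systems are all hypothetical, and your own risk model may weigh things differently.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    name: str
    purpose: str
    owner: str
    affected_groups: list = field(default_factory=list)
    likelihood: int = 1  # 1 (rare) to 5 (frequent), assumed scale
    impact: int = 1      # 1 (minor) to 5 (severe), assumed scale
    regulations: list = field(default_factory=list)

    @property
    def risk_score(self):
        """Simple likelihood-times-impact score used to rank systems."""
        return self.likelihood * self.impact


def prioritize(inventory):
    """Return systems ordered from highest to lowest risk score."""
    return sorted(inventory, key=lambda system: system.risk_score, reverse=True)


# Hypothetical inventory entries
inventory = [
    AISystem("resume-screener", "Rank job applicants", "HR analytics",
             ["applicants"], likelihood=3, impact=5, regulations=["EU AI Act"]),
    AISystem("churn-model", "Flag at-risk accounts", "Customer success",
             ["customers"], likelihood=2, impact=2, regulations=["GDPR"]),
    AISystem("loan-scoring", "Score loan applications", "Credit risk",
             ["borrowers"], likelihood=4, impact=5, regulations=["GDPR", "EU AI Act"]),
]

for system in prioritize(inventory):
    print(system.name, system.risk_score)
```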

Step 2: Establish governance and accountability structures

Create an AI governance committee to oversee your responsible AI program. Define clear roles so everyone knows who is accountable for what, and establish escalation procedures for addressing ethical concerns. This structure provides formal oversight and a clear path for resolving issues before they become crises.

Step 3: Create responsible AI policies and guidelines

Develop comprehensive policies that translate your high-level principles into practical guidelines for your teams. Standardize documentation templates and deployment checklists to maintain consistency across all teams and projects.

A governed data foundation supports policy enforcement effectively. The ThoughtSpot Agentic Analytics Platform helps you build a semantic layer that standardizes business definitions and applies governance rules directly to your data.

When your AI systems access information through this layer, they automatically inherit your responsible AI policies rather than requiring separate enforcement mechanisms.

Step 4: Implement monitoring and measurement systems

You can't manage what you don't measure. Define clear metrics for your responsible AI goals and set up tools to track them continuously.
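As a simple starting point, you could define an allowed range for each metric and flag anything outside it. The KPI names and ranges below are made up for illustration; this is a generic sketch, not a feature of any particular platform.

```python
def check_kpis(current, thresholds):
    """Return alert messages for KPIs that are missing or out of range."""
    alerts = []
    for name, (low, high) in thresholds.items():
        value = current.get(name)
        if value is None:
            alerts.append(f"{name}: no value reported")
        elif not low <= value <= high:
            alerts.append(f"{name}: {value} outside [{low}, {high}]")
    return alerts


# Hypothetical responsible AI KPIs with allowed (min, max) ranges
thresholds = {
    "accuracy_gap": (0.0, 0.05),   # max accuracy difference between groups
    "adoption_rate": (0.6, 1.0),   # share of target users actively using the tool
    "audit_pass_rate": (0.95, 1.0),
}
current = {"accuracy_gap": 0.08, "adoption_rate": 0.72}

for alert in check_kpis(current, thresholds):
    print(alert)
```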

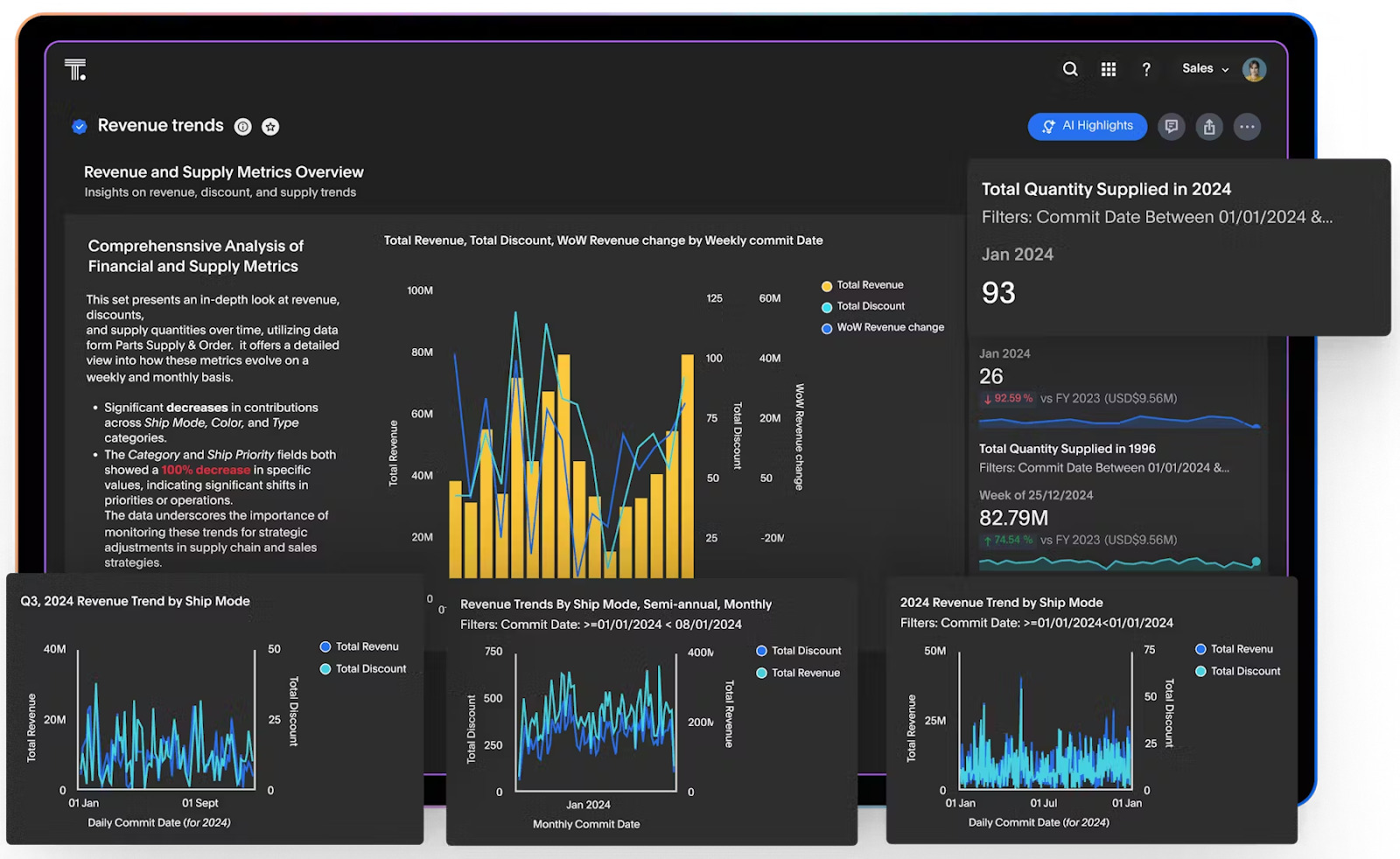

With ThoughtSpot’s interactive Liveboards, you can monitor AI performance and responsible AI KPIs in real time. This gives you instant visibility into how your systems behave, helping you spot and address deviations before they impact users.

Step 5: Build continuous improvement processes

Responsible AI is an ongoing commitment, not a one-time project. Create feedback loops that let users report issues, schedule regular policy reviews, and use findings to update your AI strategy.

Scale what works, refine what doesn’t, and keep adapting as your data, models, and business needs evolve.

Ready to put responsible AI into practice? See how ThoughtSpot's governed, transparent AI analytics platform helps you build trust and drive adoption. Start your free trial today.

What tools and frameworks support responsible AI implementation?

You don’t have to start from scratch to implement responsible AI. Several established frameworks already provide a structured path for managing AI risks and achieving compliance.

The NIST AI Risk Management Framework (AI RMF) offers comprehensive guidance for organizational approaches, while ISO/IEC 23053 provides an international framework for describing AI systems that use machine learning. The EU AI Act provides specific requirements for European operations.

Alongside these frameworks, technical tools for bias detection, model validation, and performance monitoring help operationalize your responsible AI principles. Modern analytics platforms play an equally important role by providing governed data access and transparent insights into how AI systems make decisions.

You can see this approach in action with Spotter, your AI Analyst. Its ‘glass-box’ design shows exactly which data sources were used to generate an answer and how the system arrived at its conclusions.

Unlike traditional BI tools that often function as black boxes, Spotter makes every step explainable. This transparency is fundamental to responsible AI practices, giving you the explainability needed to build trust with users and meet regulatory requirements.

| Framework | Focus Area | Best For |
|---|---|---|
| NIST AI RMF | Risk management | Comprehensive organizational approach |
| ISO/IEC 23053 | Framework for ML-based AI systems | International compliance alignment |
| EU AI Act | Regulatory compliance | European market operations |

How to measure responsible AI success

Measuring success requires looking beyond just financial returns. You need to track the cultural impact on your organization and the relevance of your AI systems to your business goals.

Key metrics to track:

Trust indicators: User confidence scores and adoption rates for your AI tools

Fairness measures: Demographic parity and equal opportunity metrics across user groups

Transparency scores: Documentation completeness and model explainability ratings

Compliance tracking: Audit pass rates and incident response times

Business impact: Revenue growth, cost savings, and efficiency improvements tied to responsible AI

Together, these measures help you assess not only how well your AI performs, but also how responsibly it operates and whether your teams and customers actually trust it.
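The two fairness measures named above have simple definitions. Demographic parity compares how often each group receives a positive prediction, and equal opportunity compares true positive rates. The sketch below assumes binary labels and uses made-up records and function names.

```python
def selection_rate(records, group):
    """Share of a group's records that received a positive prediction."""
    preds = [y_pred for g, _, y_pred in records if g == group]
    if not preds:
        raise ValueError(f"no records for {group}")
    return sum(preds) / len(preds)


def true_positive_rate(records, group):
    """Share of a group's actual positives that were predicted positive."""
    preds = [y_pred for g, y_true, y_pred in records if g == group and y_true == 1]
    if not preds:
        raise ValueError(f"no positive examples for {group}")
    return sum(preds) / len(preds)


def demographic_parity_difference(records, group_a, group_b):
    return selection_rate(records, group_a) - selection_rate(records, group_b)


def equal_opportunity_difference(records, group_a, group_b):
    return true_positive_rate(records, group_a) - true_positive_rate(records, group_b)


# Hypothetical records: (group label, actual outcome, predicted outcome)
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 0, 1), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 0, 0),
]

print(demographic_parity_difference(records, "group_a", "group_b"))
print(equal_opportunity_difference(records, "group_a", "group_b"))
```

Values near zero suggest the groups are treated similarly on that measure. The two measures can disagree, so it helps to track both.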

Put responsible AI to work in your organization

Responsible AI doesn’t slow innovation: it accelerates it. When users trust your systems and regulators approve of your practices, you can scale AI adoption across your organization with confidence.

With ThoughtSpot, responsible AI isn’t an afterthought; it’s built into the foundation of your analytics. Transparent reasoning, governed data access, and explainable insights give you the power of AI-powered analytics while maintaining the trust and accountability your organization needs.

Start your free trial today to see how responsible AI can drive better outcomes for your business.

Frequently asked questions about responsible AI

How is responsible AI different from ethical AI?

Responsible AI encompasses ethical principles but adds practical governance frameworks, implementation processes, and measurable accountability mechanisms that turn ethical ideals into operational reality.

What are the current legal requirements for responsible AI?

Legal requirements vary by region but commonly include data protection laws like GDPR and CCPA, industry-specific regulations, and emerging AI-focused legislation such as the EU AI Act.

How long does it take to implement responsible AI practices?

You can establish basic frameworks in three to six months, but building a mature responsible AI program typically takes 12 to 18 months, depending on your organization's size and complexity.

What's the return on investment for responsible AI initiatives?

Returns come through reduced legal risks, higher user adoption, improved brand trust, and operational efficiencies, with studies showing 20-30% higher success rates for AI projects that follow responsible practices.

Do I need a formal responsible AI framework if I'm in a small organization?

Yes, smaller organizations benefit significantly from building responsible AI practices early, as this helps attract customers and investors who prioritize trustworthy AI while preventing costly mistakes as you scale.