Today, the rate of innovation around data processing has accelerated beyond what any of us previously thought possible. Databricks recently announced their Databricks SQL offering, which is the next step in this evolution and builds on the foundation of Delta Lake to deliver interactive analytics at scale. This offering pairs with ThoughtSpot’s Modern Analytics Cloud to empower everyone in an organization to find answers to their questions with simple access to the data lake.

What is a lakehouse?

The concept of a data lakehouse has been around for a few years now. Ultimately it relates to the convergence of data warehouses and data lakes, driven by the rapid changes in technology on both ends of the spectrum. Traditionally, data warehouses had very limited storage capacity and were used to store only the most essential business data. As a result, data lakes emerged as dumping grounds for various other types of data, and their expansiveness required highly specialized skills and tools to explore and extract value. Data lakehouses were born to enable the types of analysis previously limited only to data warehouses at the scale of the data lake.

Even with the flexibility of a data lakehouse, we’ve learned that enabling search on top of a data lake first requires that the data be organized for search. While a data lake by definition contains high volumes and varieties of data, search-based analytics requires a data set that is structured for SQL and optimized to provide the response times users expect from consumer solutions like Google. Delta Lake provides such a structure, offering an ACID-compliant storage layer optimized for performant queries and the ability to curate “Gold” tables that store aggregated, business-level data ready for analytics. The addition of Databricks SQL provides a compute layer designed specifically to support interactive queries.

Making your data lakehouse accessible to all

The combination of Databricks SQL and ThoughtSpot allows organizations to move beyond internal analytics and provide data apps within their own product offerings. The scale and flexibility of Databricks, along with its pay-as-you-go model, make it an ideal data platform for data apps. Additionally, the newly released features of ThoughtSpot Everywhere make it easy for developers to build an interactive data app on your data lakehouse.

While every organization has a unique collection of data, the steps for getting started and deploying ThoughtSpot on top of Databricks are the same whether for internal use cases or as part of a data app. Read on to learn how.

Step 1: Organize the data within Delta Lake for search

Search in ThoughtSpot can be made even more effective when data sets are organized into “Gold” tables optimized for search-based analytics. With support for ACID transactions and schema enforcement, Delta Lake provides high-quality, reliable data that maximizes the performance benefits of search in ThoughtSpot.
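As a rough illustration of what curating a “Gold” table can look like, the sketch below builds a Databricks SQL statement that aggregates a source table into a business-level Delta table. The helper `gold_table_sql` and every table and column name (`gold.daily_sales`, `silver.orders`, `order_date`, and so on) are hypothetical and chosen only for this example. The script only prints the statement, so you would still run it in your own workspace.

```python
def gold_table_sql(gold_table, source_table, dimensions, measures):
    """Build a CREATE OR REPLACE TABLE ... USING DELTA statement that
    aggregates a source table into a business-level "Gold" table.

    `dimensions` is a list of grouping columns.
    `measures` maps an output column name to an aggregate expression.
    """
    if not dimensions or not measures:
        raise ValueError("need at least one dimension and one measure")
    select_cols = list(dimensions) + [
        f"{expr} AS {alias}" for alias, expr in measures.items()
    ]
    return (
        f"CREATE OR REPLACE TABLE {gold_table}\n"
        f"USING DELTA AS\n"
        f"SELECT {', '.join(select_cols)}\n"
        f"FROM {source_table}\n"
        f"GROUP BY {', '.join(dimensions)}"
    )


# Hypothetical table and column names, for illustration only.
statement = gold_table_sql(
    gold_table="gold.daily_sales",
    source_table="silver.orders",
    dimensions=["order_date", "region"],
    measures={"total_revenue": "SUM(amount)", "order_count": "COUNT(*)"},
)
print(statement)
```

Pre-aggregating to the grain users actually search on (here, one row per day and region) is one way to keep interactive queries small. The right grain depends on your own use cases.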

Step 2: Create a Databricks SQL endpoint within your Databricks environment

A big part of the equation in running queries is providing adequate compute power to execute them within the desired timeframe. At the same time, compute power is a cost driver in consumption-based platforms like Databricks. Right-sizing your Databricks SQL endpoints is key to providing the desired query performance while maintaining cost-efficiency. At ThoughtSpot, we also strongly recommend enabling Photon, Databricks’ Apache Spark-compatible vectorized query engine, which is designed to take advantage of modern CPU architecture for extremely fast parallel processing of data.

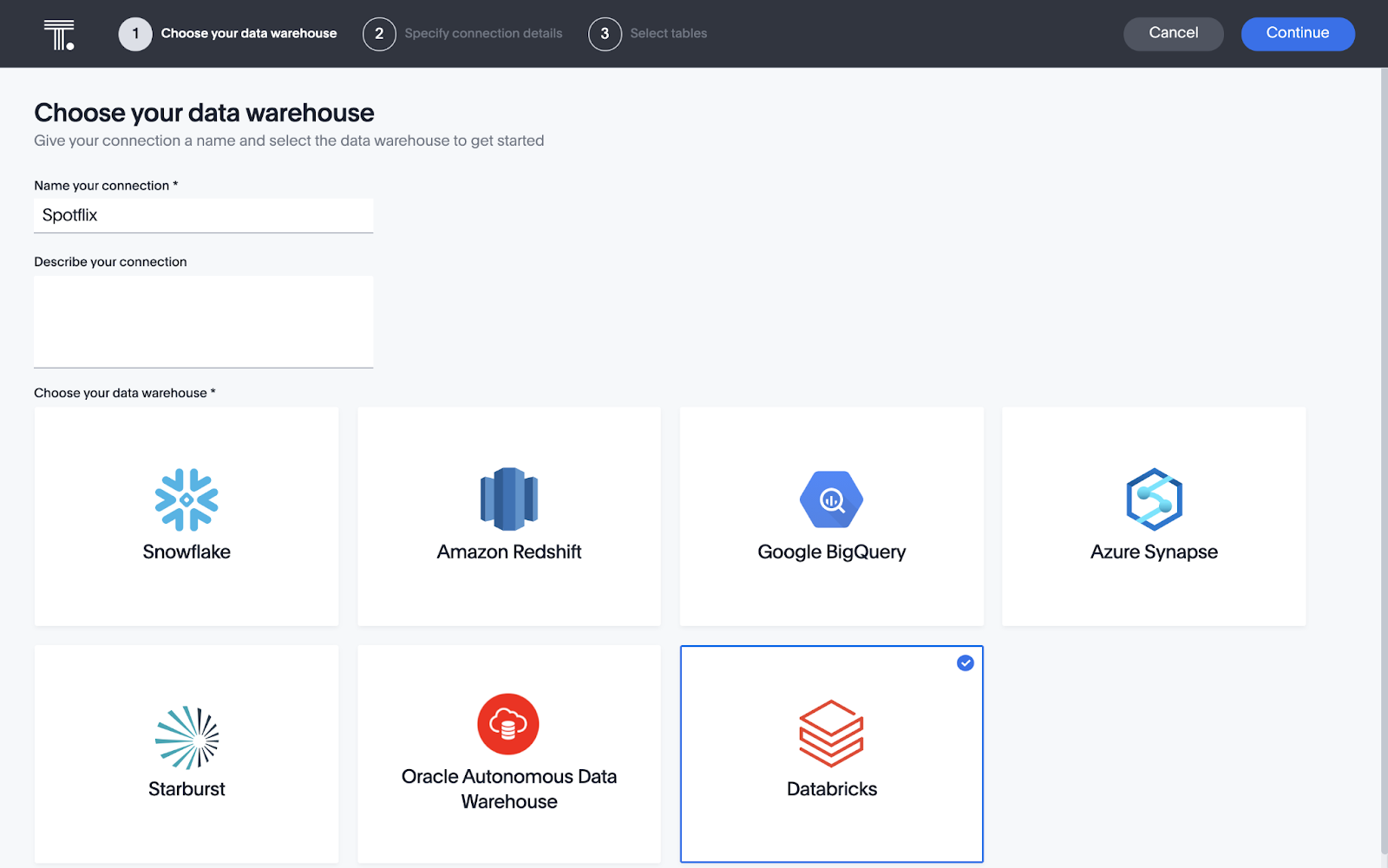

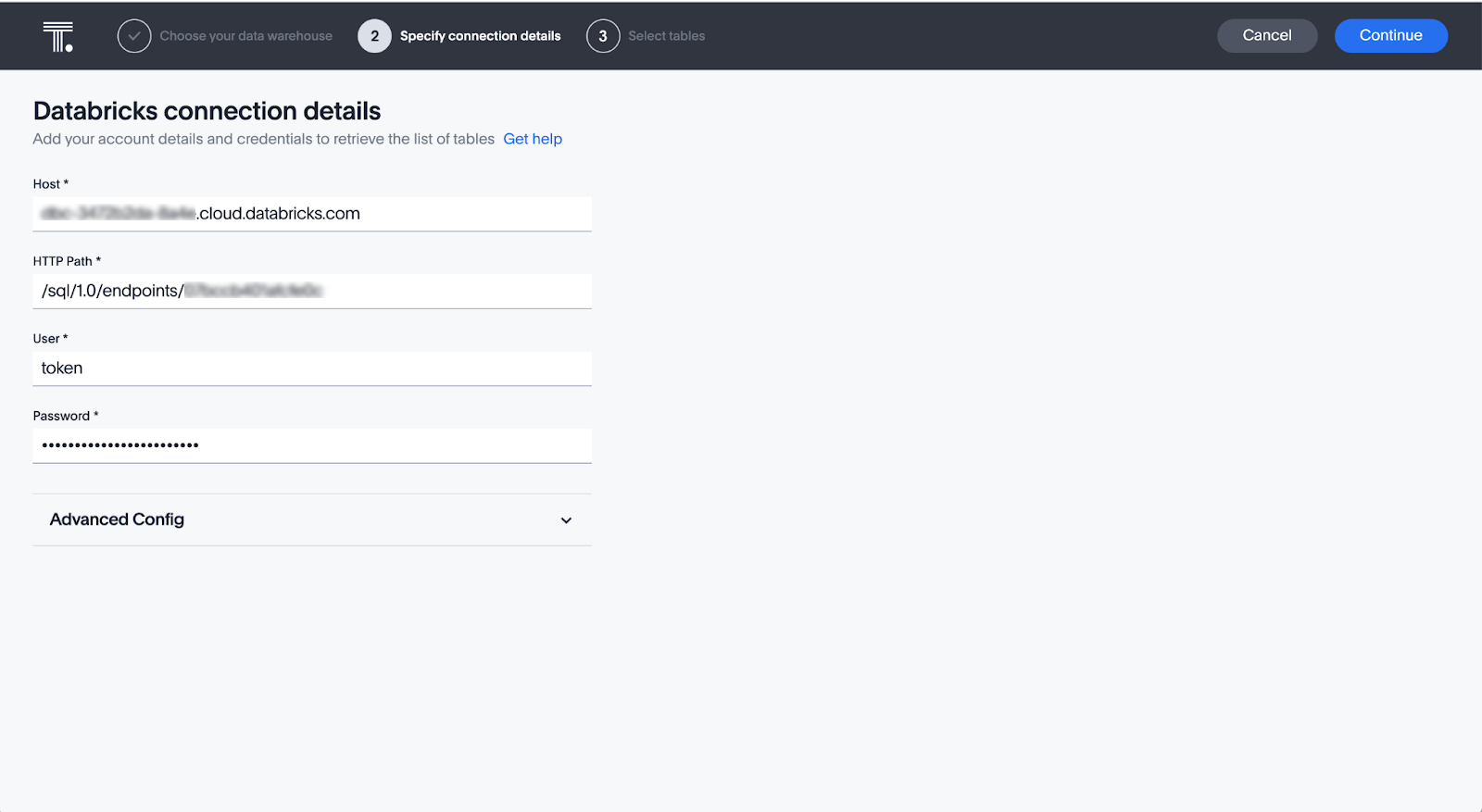
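If you prefer to script endpoint creation rather than use the UI, the settings discussed above can be captured in a small specification. The sketch below only assembles and prints such a specification as JSON and does not call Databricks. The field names (`cluster_size`, `min_num_clusters`, `max_num_clusters`, `auto_stop_mins`, `enable_photon`) reflect our understanding of the Databricks SQL endpoint REST API and should be treated as assumptions to verify against the current API reference. The endpoint name and the sizes are hypothetical.

```python
import json


def endpoint_spec(name, cluster_size="Small", max_num_clusters=1,
                  auto_stop_mins=30, enable_photon=True):
    """Assemble a settings dictionary for a SQL endpoint.

    Field names are assumptions based on the Databricks SQL endpoint
    REST API; confirm them against the current documentation.
    """
    if max_num_clusters < 1:
        raise ValueError("max_num_clusters must be at least 1")
    if auto_stop_mins < 0:
        raise ValueError("auto_stop_mins cannot be negative")
    return {
        "name": name,
        "cluster_size": cluster_size,          # controls per-query compute power
        "min_num_clusters": 1,
        "max_num_clusters": max_num_clusters,  # headroom for concurrent users
        "auto_stop_mins": auto_stop_mins,      # stop idle compute to limit cost
        "enable_photon": enable_photon,        # vectorized query engine
    }


# Hypothetical endpoint name and sizing, for illustration only.
spec = endpoint_spec("thoughtspot-search", cluster_size="Medium",
                     max_num_clusters=2)
print(json.dumps(spec, indent=2))
```

Keeping the specification in code makes it easier to review sizing changes over time. Cluster size mainly affects how fast a single query runs, while the cluster count affects how many concurrent searches the endpoint can absorb.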

Step 3: Connect ThoughtSpot to your endpoint

The ThoughtSpot Connections page allows users to create connections to leading cloud data platforms, including Databricks. A ThoughtSpot connection is created by entering the details (Hostname and HTTP Path) for a Databricks instance with login credentials. You can use either a username and password or a personal access token for increased security. If you use a personal access token, then enter the token as the Username.

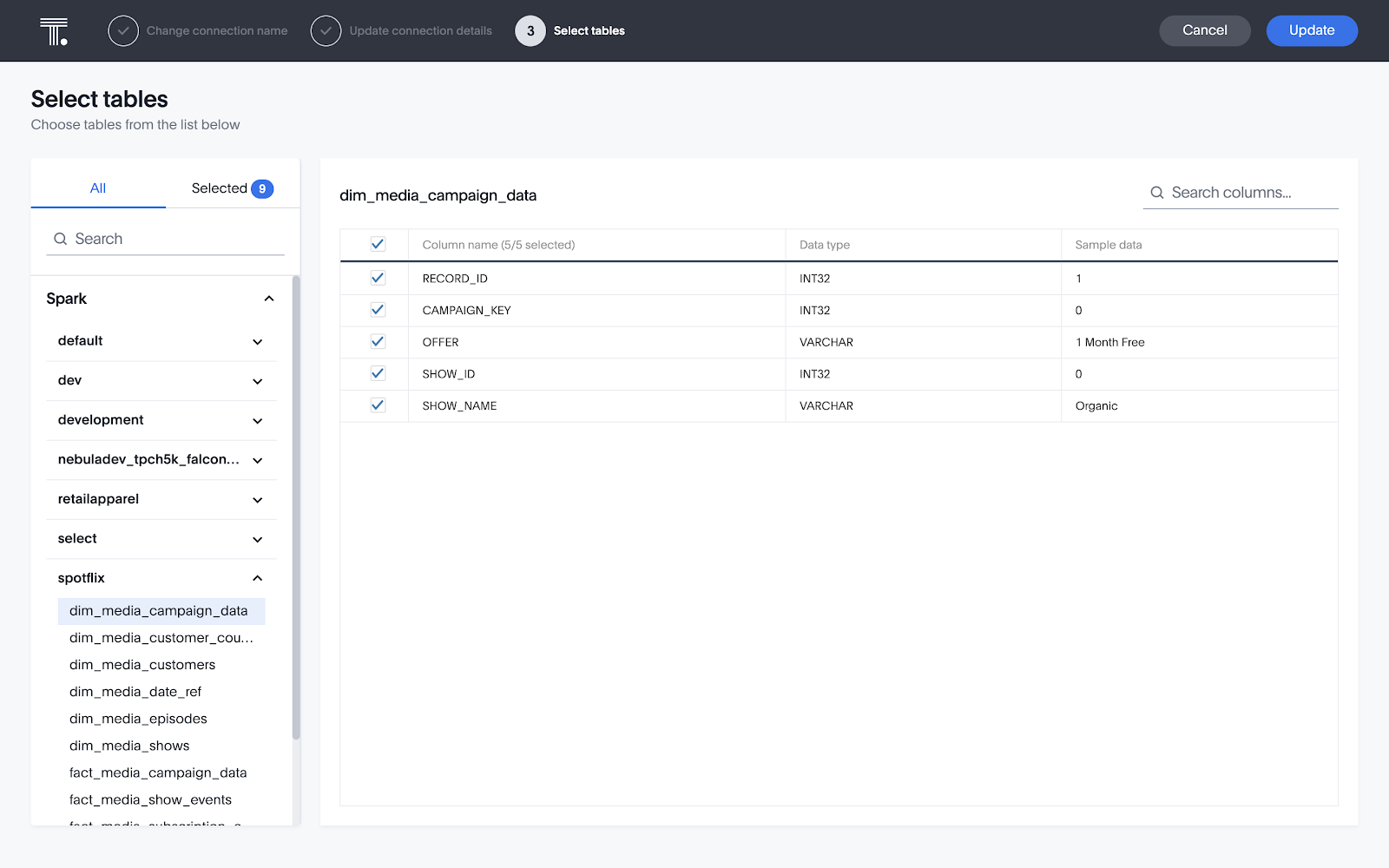
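If you only have a JDBC URL for your endpoint on hand, the Hostname and HTTP Path can be pulled out of it. The sketch below is a minimal parser that assumes the URL follows the common `jdbc:spark://<host>:<port>/<db>;key=value;...` layout with an `httpPath` property. The helper `parse_jdbc_url` and the example URL are hypothetical, and your URL may be structured differently.

```python
def parse_jdbc_url(jdbc_url):
    """Extract the hostname and HTTP path from a JDBC URL of the form
    jdbc:<driver>://<host>:<port>/<db>;key=value;key=value
    (an assumed layout; adjust if your URL differs).
    """
    prefix, sep, rest = jdbc_url.partition("://")
    if not sep or not prefix.startswith("jdbc:"):
        raise ValueError("not a JDBC URL")

    # The first segment is host:port/db; the remaining ones are properties.
    authority_and_db, *params = rest.split(";")
    host_port = authority_and_db.split("/", 1)[0]
    hostname = host_port.split(":", 1)[0]

    props = {}
    for param in params:
        if "=" in param:
            key, value = param.split("=", 1)
            props[key.strip().lower()] = value.strip()

    http_path = props.get("httppath")
    if not hostname or not http_path:
        raise ValueError("URL is missing a hostname or httpPath")
    return {"hostname": hostname, "http_path": http_path}


# Hypothetical URL, for illustration only.
details = parse_jdbc_url(
    "jdbc:spark://example.cloud.databricks.com:443/default;"
    "transportMode=http;ssl=1;httpPath=/sql/1.0/endpoints/abc123;AuthMech=3"
)
print(details["hostname"])
print(details["http_path"])
```

The two printed values correspond to the Hostname and HTTP Path fields on the connection form. Credentials are deliberately left out of the sketch so that no token ends up in a script.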

Step 4: Select the tables you want to make available for search

Once you’ve entered the connection information, ThoughtSpot will display all the databases your user credentials have access to within the Databricks instance. Tables can be added to the connection by clicking on the table name in the left pane and then selecting the top checkbox to select all columns in the table. You can also select a subset of the columns, but in most cases we recommend simply including all the columns in each table. Since ThoughtSpot is only able to join tables within a connection, you’ll want to include all of the tables for each use case in a single connection.

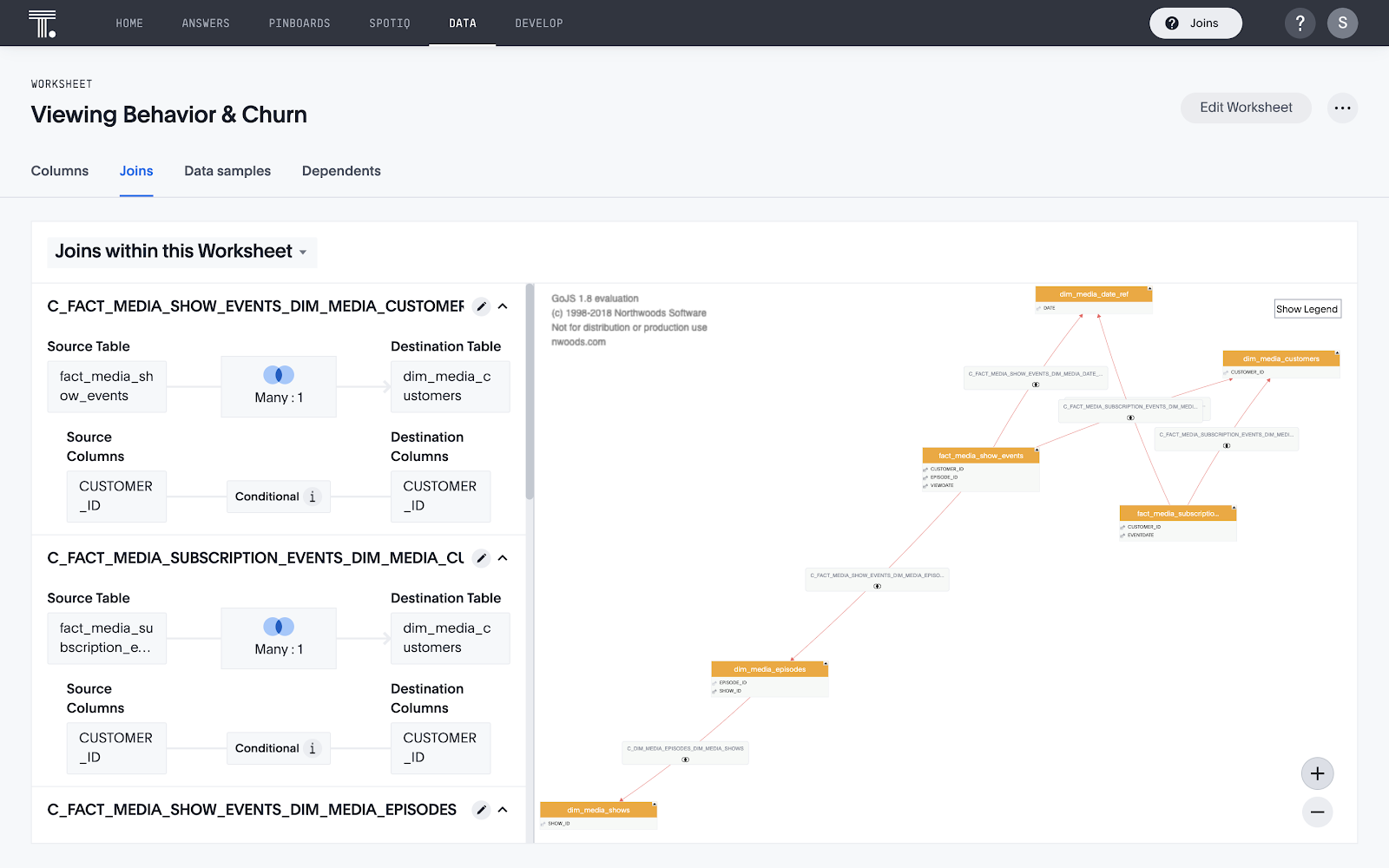

Step 5: Define the joins

Once the connection has been created, you will want to create joins for users to search across multiple tables. A connection can span multiple databases and a search can include all of the tables in a connection for which a join path exists. This flexibility allows for data from multiple subject areas to be combined to provide analysis across all your data.

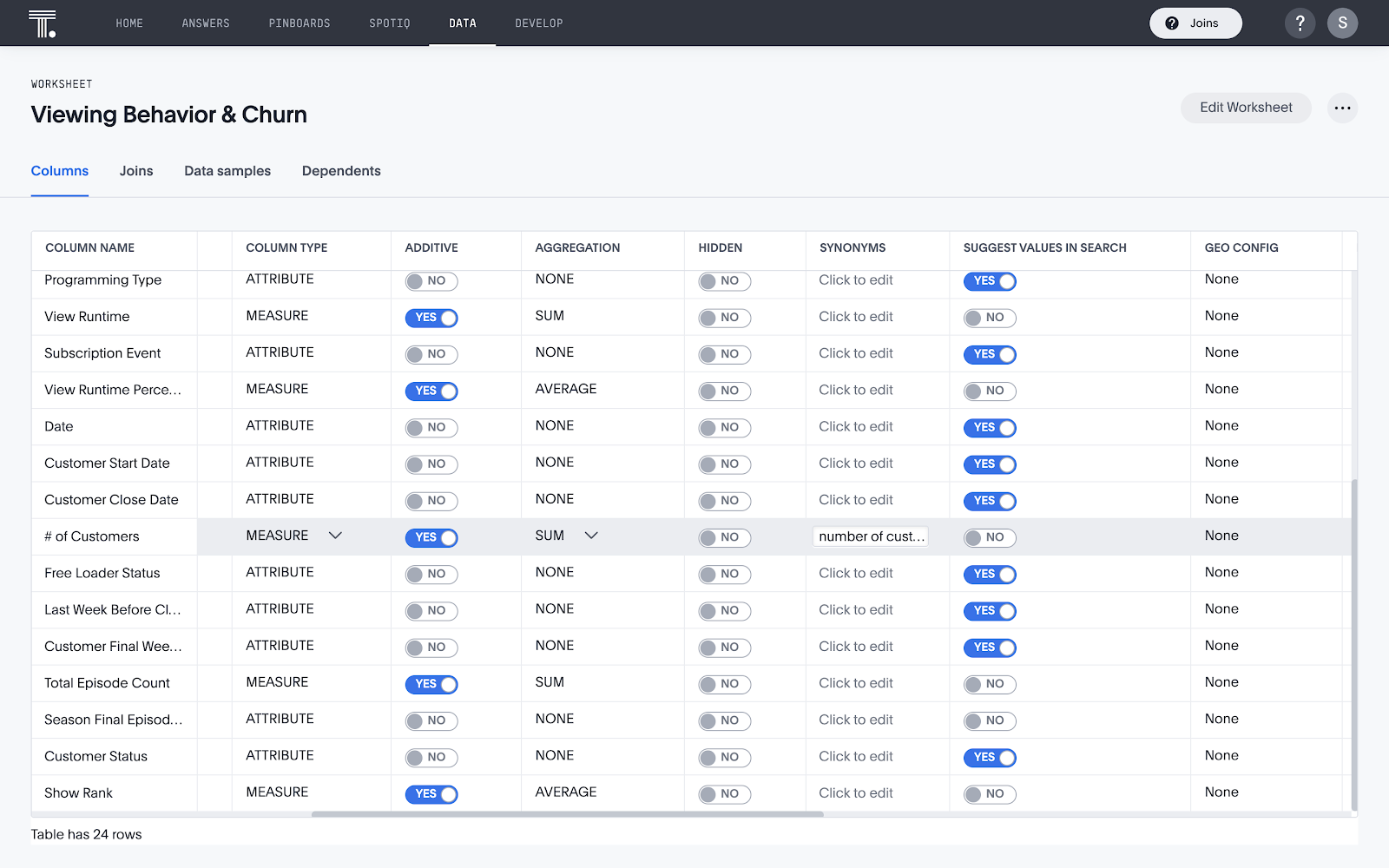
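One way to reason about which tables can appear in the same search is to treat tables as nodes and joins as edges, then check which tables are reachable from one another. The sketch below is a simplified model of that idea and not a description of how ThoughtSpot resolves joins internally. It ignores join direction and cardinality, and all table names are hypothetical.

```python
from collections import deque


def searchable_together(joins, start):
    """Return the set of tables connected to `start` by some join path.

    `joins` is a list of (table_a, table_b) pairs, treated as undirected
    edges in this simplified model.
    """
    graph = {}
    for left, right in joins:
        graph.setdefault(left, set()).add(right)
        graph.setdefault(right, set()).add(left)

    # Breadth-first search over the join graph.
    seen = {start}
    queue = deque([start])
    while queue:
        table = queue.popleft()
        for neighbor in graph.get(table, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


# Hypothetical tables spread across several databases in one connection.
joins = [
    ("sales.orders", "sales.customers"),
    ("sales.orders", "catalog.products"),
    ("hr.employees", "hr.departments"),
]
reachable = searchable_together(joins, "sales.customers")
print(sorted(reachable))
# → ['catalog.products', 'sales.customers', 'sales.orders']
```

In this example, the `hr` tables are part of the same connection but cannot be combined with the sales tables in a search, because no join links the two groups. Adding a single join between them would make all five tables reachable.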

Step 6: Build a Worksheet

The more complex your data model, the harder it can be for business users to interact with it. Worksheets in ThoughtSpot are a powerful tool for simplifying the way end users access complex data. With a Worksheet, users can search across multiple tables without worrying about joining the tables or dealing with varying granularities within the data. Worksheets also offer a way for advanced users to insert synonyms and create functions for advanced calculations.

Putting data into the hands of business users

Now you’re ready to share the Worksheet with users and unleash the Modern Analytics Cloud on your data lakehouse. Together, ThoughtSpot and Databricks open up new possibilities and new ways to get insight from your data.

If you’re curious about trying ThoughtSpot on your own Databricks instance, reach out to the ThoughtSpot sales team today.

If you’re attending the Data + AI Summit, you can also see ThoughtSpot in action on Wednesday, May 26 at 5:00 - 5:30 pm PT. Register today to save your seat!