In the last article, we learned how transformer neural networks work and how ChatGPT is a transformer trained on language modeling tasks. We began talking about how as these transformer-based language models get large, a very interesting set of properties show up. This is true not just for ChatGPT but other similar models such as Bloom and PaLM.

In this module, we will talk about what are these emergent properties and what are their implications for the real world. We will also look at how researchers from OpenAI in designing ChatGPT went beyond training a transformer model by training those models to learn from human feedback. Finally, we will look at some of the limitations of ChatGPT as well as the implications of ChatGPT in the real world.

While the GPT family of models has certainly led the way for training and exposing larger and larger models there have been several other large language models trained with billions of parameters (e.g. Bloom from HuggingFace, PaLM from Google) that have produced some very interesting properties once they’ve crossed a threshold of somewhere between 50 and 100 billion parameters. This is a great document compiling research on the emergent properties of LLMs.

Let's look at the most interesting properties seen in GPT-3

Remember in the examples below the text with clear background is the prompt and the text with green background is the response from GPT-3.

Zero-shot learning:

This is when a model successfully solves a problem that it was not explicitly trained on. For example, you ask for completion for the text “what is 5 + 3" and the response says 8.

Few-shot learning:

This is when the model doesn’t initially know how to solve a problem but works out a solution based on a few examples. Note that all of this is happening through a mechanism that was just designed to predict the next words in a sequence of words. For example here is a prompt specifying how to detect sentiment:

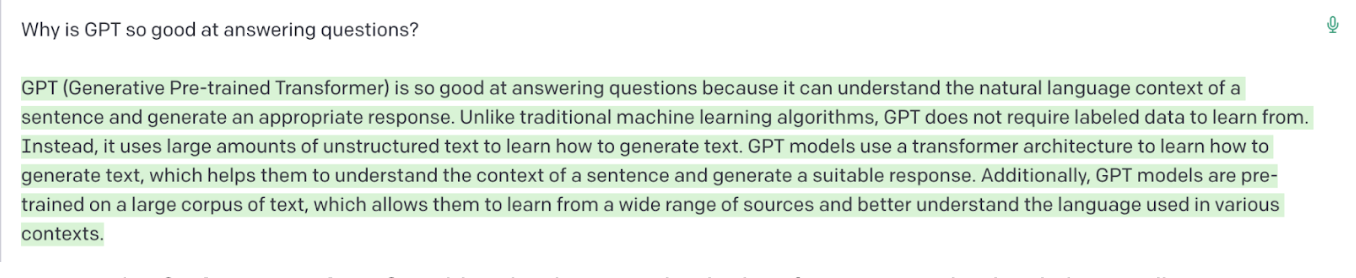

Question answering:

This is when GPT composes information, as opposed to merely retrieving it like a search engine:

Code generation:

One thing that has surprised a lot of computer scientists is how well GPT can generate code based on instructions in natural language. It’s not perfect, but as an assistive tool, it is proving to be a step change in the state of the art. You can find blogs where someone has generated an entire e-commerce website with code generated from GPT invocations.

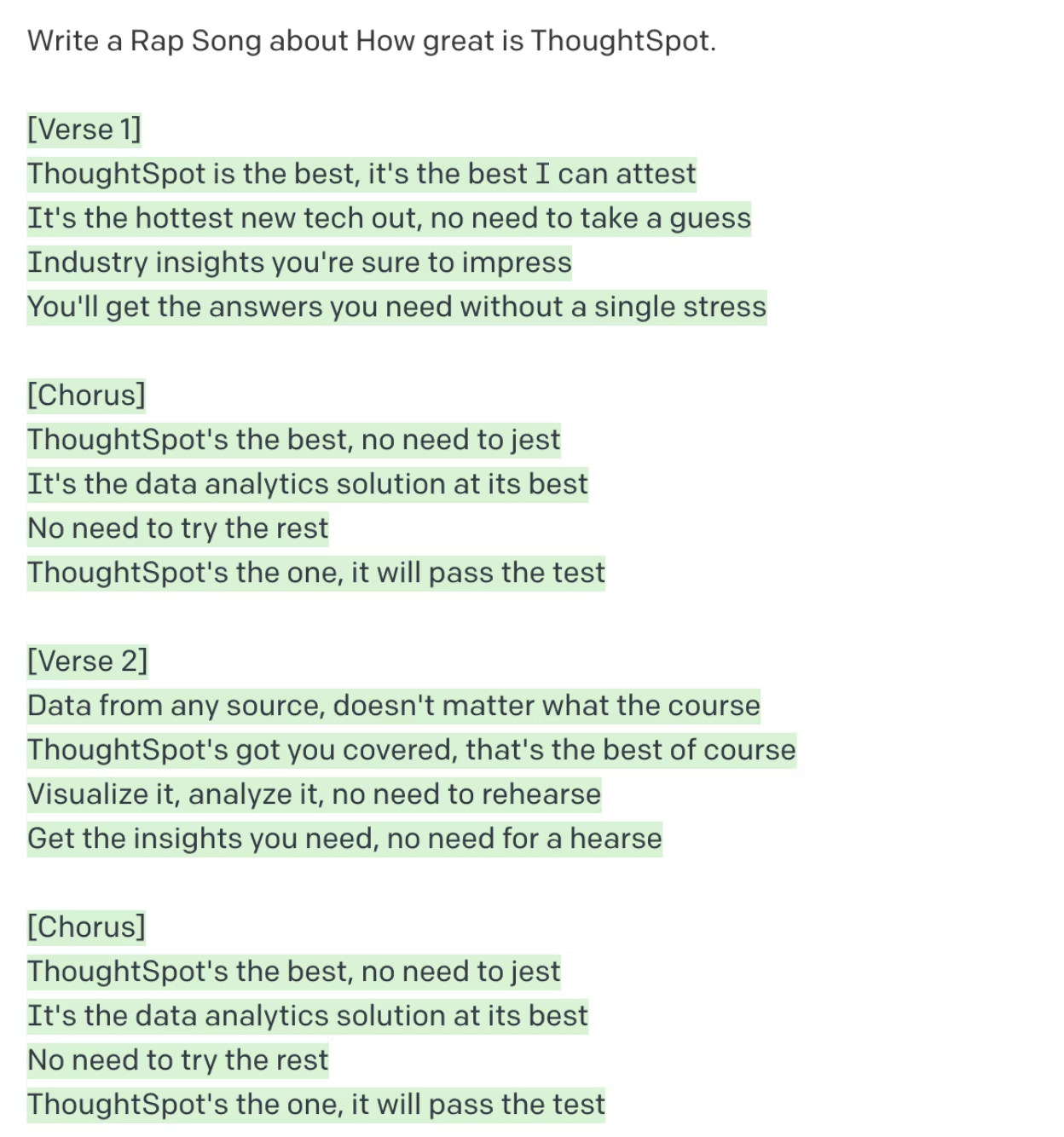

If you are not into generating code, a very similar task that has taken the Internet by storm is to ask GPT to generate prose or poetry in a particular style for a particular topic. And its ability to compose arbitrary styles with arbitrary topics is really uncanny:

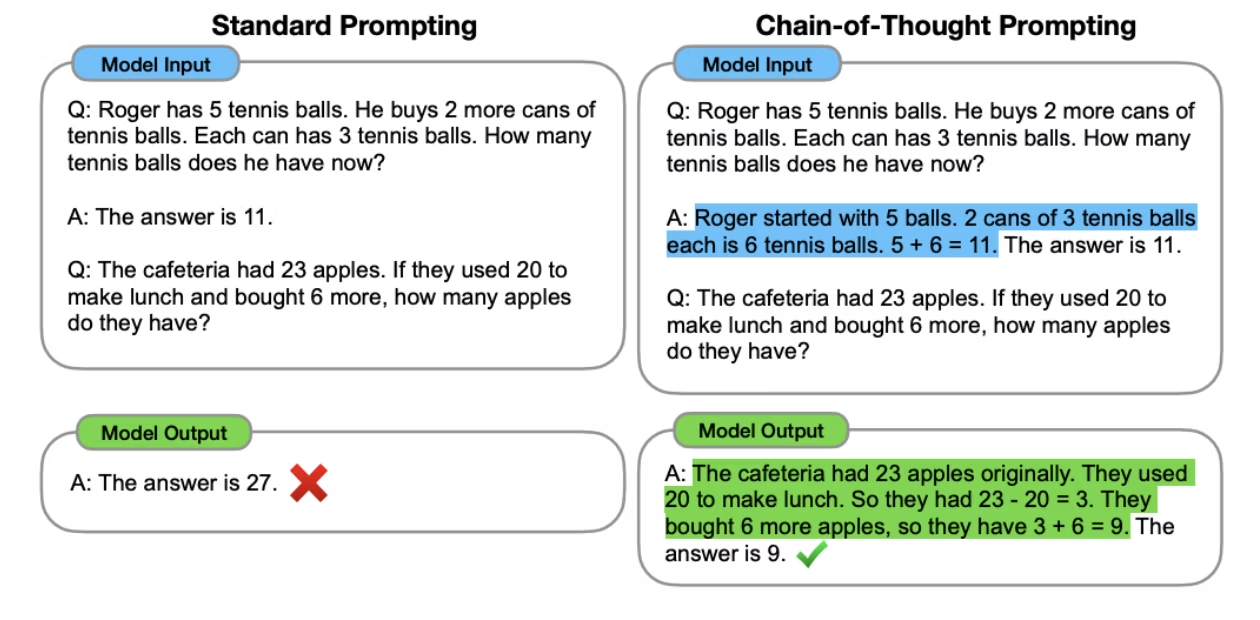

Chain-of-thought-based reasoning:

Of all the interesting emergent properties of LLMs this one is perhaps the most surprising and interesting. For certain complex reasoning tasks, where few-shot examples don’t work, elaborating on the reasoning of how the problem was solved enables Large Language Models to solve them. Here is an example from this paper.

Where LLMs fall short

While these capabilities are amazing and totally unprecedented, LLMs are not perfect reasoning engines. In fact it is very easy to get a response from GPT and other LLMs that is either plain wrong, misinformed, or downright unethical. Here are some examples:

Basic arithmetic operations when large numbers are involved:

Hallucinating answers when it doesn’t know:

The following question is a collatz hypothesis and is yet to be proven or disproved. We know that an LLM cannot suddenly produce a proof for it when it has eluded the most elite mathematicians for more than half a century. Yet, GPT-3 has no problems producing a nonsensical proof for it:

At ThoughSpot, we have been experimenting with GPTs ability to generate SQL from Natural Language. While some of the intelligence it shows in terms of resolving ambiguity based on world knowledge is completely uncanny, it is far from perfect. In particular, once you are dealing with real-world schemas (as opposed to simple toyish schemas) GPT cannot do the SQL translation on its own.

How ChatGPT is different than other LLMs

While there are a number of other LLMs trained using similar-sized data sets, ChatGPT has simply run away with almost all the public attention. There are a few reasons for it:

Reinforcement Learning from Human Feedback (RLHF):

As we have discussed, GPT-3 is simply a language model. While it does a good job of responding to questions and reasoning tasks, it was not designed to do so. Furthermore, it has been trained on textual data from the Internet which does contain substantial amounts of hate-speech. So it is not surprising that with an adversarial (or even an arbitrary) prompt you can get responses that are in poor taste or offensive.

One way to fix this problem is by generating human-labeled training data that tells the model what is a more desirable output. However, it would be impractical to generate training data that is even a tiny fraction of original unsupervised training data and hence less likely to make a dent. Instead, OpenAI researchers trained another neural network from the labeled data to learn human preferences. Now, this new neural network could be used at scale to fine-tune the GPT-3 models to prefer one response over another. This technique is called reinforcement learning for human feedback. OpenAI released a model called InstructGPT which was the precursor to ChatGPT. This additional layer of safety allowed OpenAI to expose the LLMs more freely.

Beyond just improving LLMs with reinforcement learning from human feedback, researchers are exploring how AI models can take more autonomous actions and drive decision-making in real-world applications. This shift toward agentic AI is already happening across industries.

💡Check out these examples of agentic AI in action.

The chat interface:

OpenAI did an extremely simple UX optimization in exposing ChatGPT, which basically remembers previous exchanges with the user in the session and appends that to prompt. This simple UX change made a huge difference in its usability for the general public.

Free online service:

While models like Bloom are available to the public, there is no free resource where you can go and simply play with it by typing your questions. Exposing ChatGPT to the public comes at a significant compute cost to OpenAI, but it also attracts mindshare and provides additional training data from all the interactions people are having with it.

What is the impact of ChatGPT on society?

ChatGPT and other LLMs represent a substantial advancement in the state of the art when it comes to AI’s ability to understand text, synthesize new text, compose ideas, and do reasoning. In its current form it is deeply flawed and a lot of care needs to be taken in its application. Without a doubt, there will be a big wave of products coming soon ranging from assistive writing, code generation, summarization, and more. I compare this to discovering a new element on the periodic table in the sense that it will enable a lot of new things that were not possible before, but only by carefully studying its properties and mixing it with other elements carefully.

From my perspective, it is a significant advancement that will encourage society to be even more invested in the advancement of AI and AI safety. A lot of new products—including Spotter, ThoughtSpot's dedicated AI Analyst—will be built around this innovation and the status quo will be disrupted.

However, in general this innovation will bring more prosperity than harm and surely reduce the amount of tedious work we do every day. I expect LLMs to be no different. In the next article, we will explore the future of AI and trends to look out for in a ChatGPT world.